The Ultimate Stack for Serverless & Cloud-Native Developers in 2025 ⚡️

The goal of serverless and cloud-native has always been to “build, ship, and scale faster” without worrying about infrastructure.

And with more companies going all-in on AWS, Google Cloud and Azure (Datadog 2023 report), the demand for the right tech stack has never been higher.

But with so many tools out there, choosing the right stack can be overwhelming. That’s why I’ve curated 7 tools you can use to build, deploy, and scale cloud-native applications.

Let’s start!

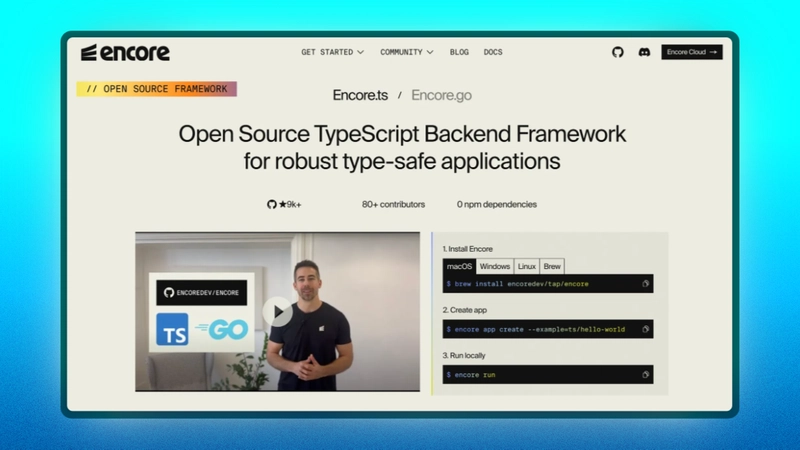

Encore – The Backend Framework Designed for Cloud-Native Developers

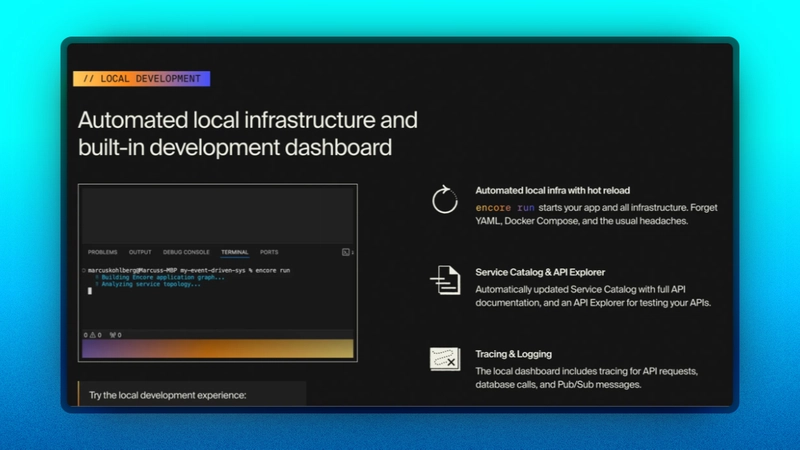

Encore is a cloud-native backend framework for type-safe applications that lets you build, test, and deploy services without manually managing any infrastructure.

This framework is a good fit for Go and TypeScript developers because it lets you focus on your project’s code and logic while it automatically provisions the cloud infrastructure your services need.

The generated infrastructure runs and monitors your project, making the whole deployment process super easy and stress-free.

Also, it simplifies the process of building a distributed system.

If this does not convince you to try out Encore, here are a few interesting features I noticed that might:

- Encore generates and updates documentation for APIs and services created for your system.

- Encore helps developers focus on the application logic and ensure they follow best practices for authentication, service-to-service communication, tracing, etc.

- Also, Encore offers an optional Cloud platform for automating DevOps processes on AWS and GCP.

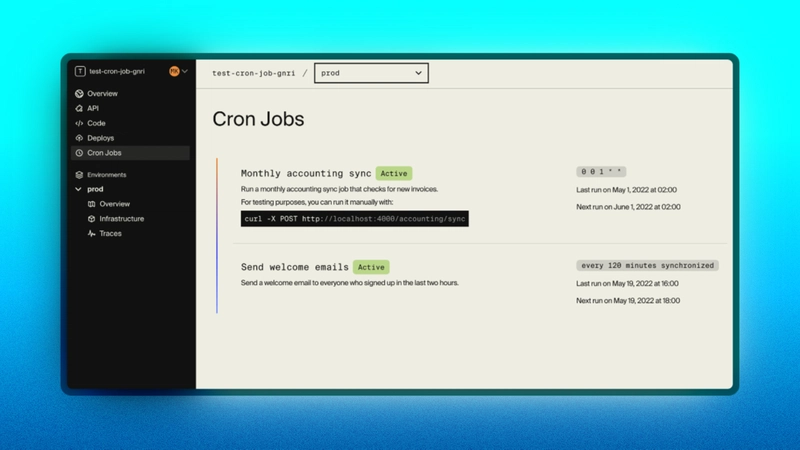

For periodic and recurring tasks, Encore.ts provides a declarative way of using Cron Jobs. With this, you don’t need to maintain infrastructure, as Encore manages scheduling, monitoring, and execution of Cron Jobs.

Here’s an example of how easily you can define a **Cron Job**:

import { CronJob } from "encore.dev/cron";
import { api } from "encore.dev/api";

// Send a welcome email to everyone who signed up in the last two hours.
const _ = new CronJob("welcome-email", {
  title: "Send welcome emails",
  every: "2h",
  endpoint: sendWelcomeEmail,
});

// Emails everyone who signed up recently.
// It's idempotent: it only sends a welcome email to each person once.
export const sendWelcomeEmail = api({}, async () => {
  // Send welcome emails...
});

Once deployed, Encore automatically registers the Cron Job and executes it as scheduled.
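The comment in the example says the endpoint is idempotent, but the body is left empty. Here is a minimal sketch of one way that could work, in plain TypeScript with no Encore imports. The `User` shape, the in-memory `alreadyWelcomed` set, and `selectUsersToWelcome` are all assumptions made for illustration; a real service would keep this state in a database.

```typescript
// Hypothetical user record; the field names are assumptions for this sketch.
interface User {
  id: string;
  email: string;
  signedUpAt: Date;
}

// IDs of users who have already received a welcome email.
// A real service would persist this instead of keeping it in memory.
const alreadyWelcomed = new Set<string>();

// Returns users who signed up inside the window and have not been emailed yet,
// then marks them as welcomed so a later run skips them.
function selectUsersToWelcome(users: User[], now: Date, windowMs: number): User[] {
  const cutoff = now.getTime() - windowMs;
  const pending = users.filter(
    (u) => u.signedUpAt.getTime() >= cutoff && !alreadyWelcomed.has(u.id),
  );
  for (const u of pending) {
    alreadyWelcomed.add(u.id);
  }
  return pending;
}

const TWO_HOURS_MS = 2 * 60 * 60 * 1000;
const runTime = new Date("2025-03-01T12:00:00Z");
const signups: User[] = [
  { id: "u1", email: "a@example.com", signedUpAt: new Date("2025-03-01T11:30:00Z") },
  { id: "u2", email: "b@example.com", signedUpAt: new Date("2025-03-01T08:00:00Z") },
];

// Running the selection twice models the cron job firing twice over the same data.
const firstRun = selectUsersToWelcome(signups, runTime, TWO_HOURS_MS);
const secondRun = selectUsersToWelcome(signups, runTime, TWO_HOURS_MS);
console.log(firstRun.map((u) => u.id), secondRun.map((u) => u.id));
```

The first run picks up only the user who signed up within the last two hours, and the second run finds nobody left to email, which is the property you want from a job that may fire more than once.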

You can check your Cron Job executions in the Encore Cloud dashboard.

If you’re working with Go or TypeScript and want to focus on development, without thinking about manual infrastructure management, Encore is built for you.

Try Encore today and build faster, smarter, and stress-free.

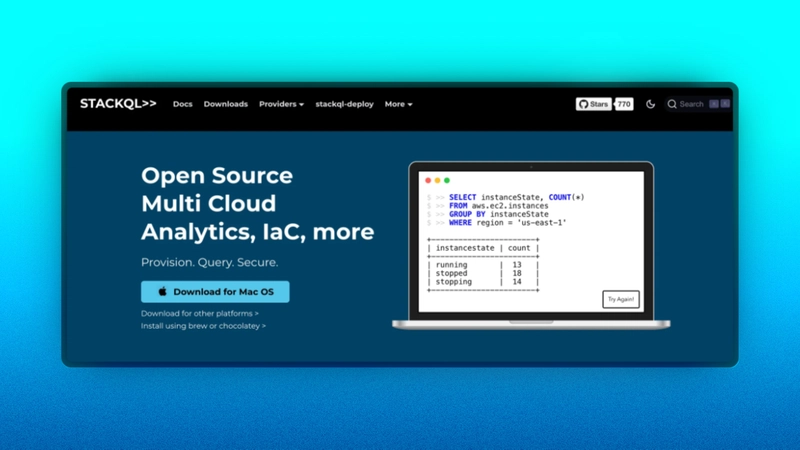

StackQL – SQL Interface for Cloud Infrastructure

Once your app is up and running, the next challenge is managing infrastructure programmatically. That’s where Infrastructure as Code (IaC) tools like StackQL come in.

If you are an SQL nerd, you will fall in love with StackQL and its unique, familiar SQL syntax. It makes infrastructure management look as easy as writing database queries.

StackQL is the first tool I came across that uses SQL commands to create, query, and manage cloud infrastructure on providers like AWS, GCP, and Azure.

https://www.youtube.com/watch?v=YuEmv58Ap18

With StackQL, you can provision cloud resources such as a database, generate reports, or query resources without needing to learn a scripting language or a cloud-specific SDK.

You can install StackQL with a single command:

brew install stackql

With StackQL, you can create a virtual machine on Google Cloud using a SQL query:

INSERT INTO google.compute.instances (
  project,
  zone,
  data__name,
  data__machineType,
  data__networkInterfaces
)
SELECT
  'my-project',
  'us-central1-a',
  'my-vm',
  'n1-standard-1',
  '[{"network": "global/networks/default"}]';

This command creates a virtual machine in Google Cloud with the project, zone, name, machine type, and network interface you specify.

You can also use StackQL to generate reports by querying cloud infrastructure resources, with a query along these lines:

SELECT
  project,
  resource_type,
  SUM(cost) AS total_cost
FROM
  billing.cloud_costs
WHERE
  usage_start_time >= '2025-03-01'
  AND usage_end_time <= '2025-03-31'
GROUP BY
  project, resource_type
ORDER BY
  total_cost DESC;

Besides the cool fact that StackQL lets you use SQL queries to manage resources on the cloud, here are two other unique features I noticed when testing out StackQL:

- StackQL makes infrastructure management super easy by automating compliance checks and generating cost and security reports.

- It lets you pull data from your cloud resources into dashboards and pipelines using SQL queries, so you can audit resources without opening a cloud console.

StackQL lets you work your way, declaratively or procedurally, without managing state files. Its simple SQL-based syntax makes it easy to integrate with other IaC and cloud-native tools.

Use StackQL to deploy infrastructure, run cloud asset reports, check compliance, detect configuration drift, and more.
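To make "detect configuration drift" a bit more concrete, here is a rough sketch of the comparison step in plain TypeScript. It does not call StackQL. The `Inventory` shape, the resource names, and `detectDrift` are assumptions for illustration, and the "reported" side stands in for rows you would get back from a SELECT query.

```typescript
// A flat view of one resource's settings, and a set of resources keyed by name.
type ResourceConfig = Record<string, string>;
type Inventory = Record<string, ResourceConfig>;

interface DriftEntry {
  resource: string;
  field: string;
  expected?: string;
  actual?: string;
}

// Returns the keys of both objects, without duplicates, in first-seen order.
function uniqueKeys(a: object, b: object): string[] {
  const keys = Object.keys(a);
  for (const key of Object.keys(b)) {
    if (keys.indexOf(key) === -1) keys.push(key);
  }
  return keys;
}

// Lists every field whose value differs between the desired and reported state.
function detectDrift(desired: Inventory, actual: Inventory): DriftEntry[] {
  const drift: DriftEntry[] = [];
  for (const resource of uniqueKeys(desired, actual)) {
    const want: ResourceConfig = desired[resource] ?? {};
    const have: ResourceConfig = actual[resource] ?? {};
    for (const field of uniqueKeys(want, have)) {
      if (want[field] !== have[field]) {
        drift.push({ resource, field, expected: want[field], actual: have[field] });
      }
    }
  }
  return drift;
}

const desiredState: Inventory = {
  "my-vm": { machineType: "n1-standard-1", zone: "us-central1-a" },
};
// Stand-in for query results; in practice these values would come from the cloud provider.
const reportedState: Inventory = {
  "my-vm": { machineType: "n1-standard-2", zone: "us-central1-a" },
  "stray-vm": { machineType: "e2-micro", zone: "us-central1-a" },
};

const driftReport = detectDrift(desiredState, reportedState);
for (const d of driftReport) {
  console.log(`${d.resource}.${d.field}: expected ${d.expected}, found ${d.actual}`);
}
```

Here the report flags the changed machine type on `my-vm` and both fields of `stray-vm`, a resource that exists in the cloud but not in the desired state.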

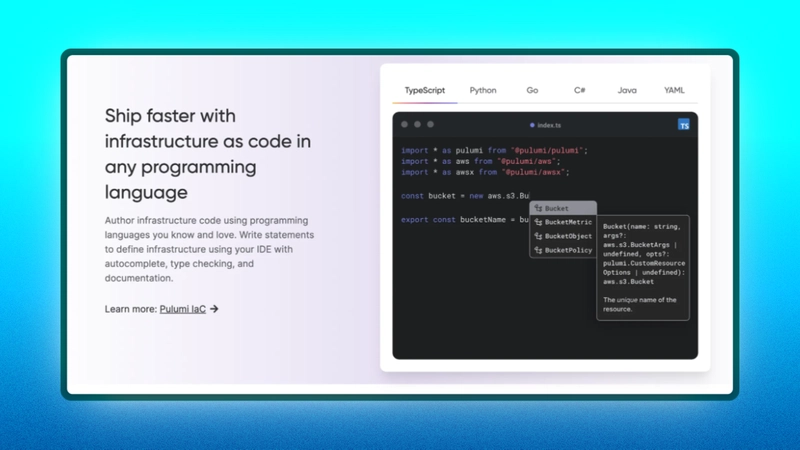

Pulumi – Modern Infrastructure as Code

Pulumi is a modern Infrastructure-as-Code (IaC) tool for managing the cloud resources behind your serverless applications. It acts as a cloud SDK that lets you define infrastructure using real variables, functions, classes, loops, etc., in a programming language of your choice.

Pulumi lets you manage cloud infrastructure such as servers, databases, and networks through code, so you don’t have to configure each resource by hand in the cloud provider’s console.

You can create an S3 bucket in AWS using Pulumi:

import * as pulumi from "@pulumi/pulumi";
import * as aws from "@pulumi/aws";

// Create an S3 bucket
const bucket = new aws.s3.Bucket("my-bucket", {
  acl: "private",
});

// Export the bucket name
export const bucketName = bucket.id;

You can also deploy a Kubernetes pod that runs an Nginx container using Pulumi. This lets you define the desired state of your infrastructure in code and makes deployment easy.

import * as pulumi from "@pulumi/pulumi";
import * as k8s from "@pulumi/kubernetes";

// Create a Kubernetes Pod
const pod = new k8s.core.v1.Pod("my-pod", {
  metadata: {
    name: "example-pod",
  },
  spec: {
    containers: [
      {
        name: "nginx",
        image: "nginx:latest",
        ports: [
          {
            containerPort: 80,
          },
        ],
      },
    ],
  },
});

// Export the name of the pod
export const podName = pod.metadata.name;

So, why should you use Pulumi?

- Pulumi allows you to build your infrastructure using programming languages such as Go, Python, TypeScript, C#, and Java.

- When you define cloud resources in Pulumi, it provisions what you need, such as a virtual machine or storage bucket, so you don’t have to use the cloud provider’s dashboard.

- Pulumi lets you create reusable components when setting up your infrastructure as code, and it integrates with CI/CD pipelines.

- This is probably why I love Pulumi; it works well with any cloud provider, so it isn’t limited and is perfect for any dynamic cloud environment.

Pulumi is most useful when you want to avoid YAML or JSON hell when building cloud infrastructure, when you need to reuse components across your infrastructure, or when you want your infrastructure code to live alongside your application code for better cohesion.
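The "real functions and loops" point is easier to see with a small sketch. The snippet below is plain TypeScript and does not import Pulumi. `BucketArgs`, `bucketFor`, and the naming scheme are assumptions made for illustration, and `BucketArgs` is only a tiny subset of what a real bucket resource accepts.

```typescript
// A small subset of bucket settings, assumed for this sketch.
interface BucketArgs {
  acl: "private" | "public-read";
  tags: Record<string, string>;
}

interface BucketSpec {
  resourceName: string;
  args: BucketArgs;
}

// A reusable function: naming and tagging rules live in one place.
function bucketFor(app: string, env: string): BucketSpec {
  return {
    resourceName: `${app}-${env}-assets`,
    args: { acl: "private", tags: { app, env } },
  };
}

// An ordinary loop (here, map) produces one spec per environment.
const environments = ["dev", "staging", "prod"];
const bucketSpecs = environments.map((env) => bucketFor("shop", env));

// In a Pulumi program, each spec would be passed to a resource constructor,
// for example: new aws.s3.Bucket(spec.resourceName, spec.args)
for (const spec of bucketSpecs) {
  console.log(spec.resourceName, JSON.stringify(spec.args.tags));
}
```

Adding a fourth environment is a one-word change to the array, which is the kind of reuse that gets awkward in static YAML or JSON templates.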

Now that your infrastructure is set up, you need to automate continuous integration and continuous delivery (CI/CD) pipelines and manage workflows to deploy and manage your serverless application.

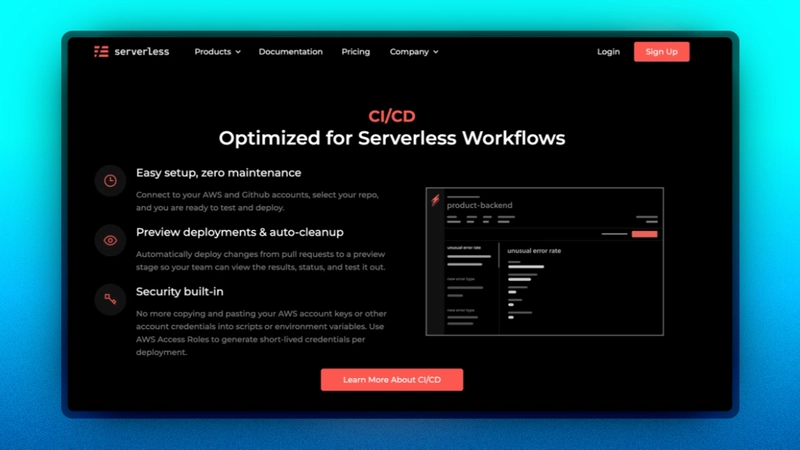

Serverless Framework – The Battle-Tested Classic

Picture a platform that lets you auto-scale your applications on AWS regardless of the programming language you use. This platform exists to make scalability and performance management super easy for you and gives you the freedom to focus on building your awesome applications.

The Serverless Framework is THAT tool. It’s a framework for building and deploying serverless applications on cloud providers like AWS, Azure, Google Cloud, etc. It was originally built for AWS Lambda, and support for other providers was added over time.

The Serverless Framework lets you define a simple service with a function in a YAML configuration file:

service: my-service

provider:
  name: aws
  runtime: nodejs20.x

functions:
  hello:
    handler: handler.hello
    events:
      - http:
          path: hello
          method: get
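The configuration above points at `handler.hello`, but the handler itself isn’t shown. Here is a minimal sketch of what it could look like. It is written in TypeScript, so it would need to be compiled to `handler.js` (or built with a TypeScript plugin) before deployment. The `HttpEvent` and `HttpResponse` types are simplified stand-ins I defined for this example, and `buildGreeting` is a hypothetical helper.

```typescript
// Simplified request and response shapes for an HTTP-triggered function.
// Real projects usually take fuller types from the @types/aws-lambda package.
interface HttpEvent {
  path: string;
  httpMethod: string;
  queryStringParameters?: Record<string, string> | null;
}

interface HttpResponse {
  statusCode: number;
  headers: Record<string, string>;
  body: string;
}

// Pure helper so the response logic can be checked without deploying anything.
function buildGreeting(event: HttpEvent): HttpResponse {
  const who = event.queryStringParameters?.name ?? "world";
  return {
    statusCode: 200,
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ message: `Hello, ${who}!`, path: event.path }),
  };
}

// The function that `handler: handler.hello` refers to.
export const hello = async (event: HttpEvent): Promise<HttpResponse> => {
  return buildGreeting(event);
};
```

Keeping the logic in a plain function like `buildGreeting` means you can unit test it directly and leave the exported `hello` as a thin wrapper.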

And you can also test your functions locally:

serverless invoke local --function hello

You can also deploy your serverless applications:

serverless deploy

Why should you use this tool?

- The serverless model offers developers a burden-free DevOps process, auto-scaling, and a pay-as-you-go pricing model. This is perfect for any side project because managing a serverless infrastructure directly can become overwhelming.

- The Serverless Framework automates tasks and deployments across the cloud providers it supports, so you are not restricted to a particular provider.

- Another great reason to use this framework is its wide, rich plugin ecosystem, which allows developers to extend existing capabilities for monitoring or security.

The Serverless Framework is useful when you are building APIs or automation workflows on AWS Lambda (or similar platforms), or when you need a strong ecosystem for functions-as-a-service (FaaS) that offers a basic boilerplate.

Check out Serverless Framework 🔥

Jozu - The DevOps Platform for AI Applications

Jozu is designed to manage your AI/ML projects. It hosts ModelKits, a packaging format that bundles the components of a project (code, datasets, and configuration) into a single package. These ModelKits can then be deployed as a unit in the cloud.

Jozu's main aim is to manage and deploy your AI/ML projects through ModelKits, and it works hand-in-hand with KitOps to create and deploy ModelKits.

For example, you can use KitOps CLI to create a ModelKit and push it to Jozu:

# Initialize a new ModelKit
kit init my-modelkit

# Add files to the ModelKit (e.g., datasets, code, configurations)
cp my-dataset.csv my-modelkit/
cp my-model.py my-modelkit/

# Push the ModelKit to Jozu Hub
kit push jozu.ml/my-organization/my-modelkit:latest

Then, using Jozu, you can generate Docker containers for your ModelKit, which you can deploy locally or in a Kubernetes cluster:

# Pull the ModelKit container
docker pull jozu.ml/my-organization/my-modelkit:latest

# Run the container locally
docker run -it --rm -p 8000:8000 jozu.ml/my-organization/my-modelkit:latest

Jozu can benefit your ML/AI projects. Here are some things it offers that are worth looking out for:

- Jozu gives you more control over your deployed projects than public registries do.

- When you update a file or code in your ModelKit, Jozu makes it easy for you and your team to keep track of which version of the ModelKit has been changed and what changes have been made. You can easily roll back if the current version has an issue or an error.

- Jozu makes it easy to audit your AI/ML projects by saving each version as an unchangeable ModelKit. You or your team can revisit it at any time and get exact data.
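The ideas in the last two points (unchangeable versions, seeing what changed, rolling back) can be sketched in a few lines of TypeScript. To be clear, this is a toy model and not how Jozu or KitOps are implemented. `KitHistory`, the tags, and the digest strings are all hypothetical.

```typescript
// One published version: a tag plus a map of file name to content digest.
interface KitVersion {
  readonly tag: string;
  readonly files: Readonly<Record<string, string>>;
}

class KitHistory {
  private readonly versions: KitVersion[] = [];

  // Stores a frozen copy so a published version cannot be edited afterwards.
  publish(tag: string, files: Record<string, string>): KitVersion {
    const version: KitVersion = Object.freeze({ tag, files: Object.freeze({ ...files }) });
    this.versions.push(version);
    return version;
  }

  // Looks up a version by tag; returns undefined if the tag was never published.
  find(tag: string): KitVersion | undefined {
    return this.versions.filter((v) => v.tag === tag)[0];
  }

  // Names of files that were added, removed, or changed between two tags.
  changedFiles(fromTag: string, toTag: string): string[] {
    const before: Readonly<Record<string, string>> = this.find(fromTag)?.files ?? {};
    const after: Readonly<Record<string, string>> = this.find(toTag)?.files ?? {};
    const names = Object.keys(before);
    for (const n of Object.keys(after)) {
      if (names.indexOf(n) === -1) names.push(n);
    }
    return names.filter((n) => before[n] !== after[n]).sort();
  }
}

const kitHistory = new KitHistory();
kitHistory.publish("v1", { "model.py": "aaa", "data.csv": "111" });
kitHistory.publish("v2", { "model.py": "bbb", "data.csv": "111", "config.yaml": "ccc" });

console.log(kitHistory.changedFiles("v1", "v2"));

// Rolling back means pointing deployments at the earlier tag again; v1 itself is untouched.
const rollbackTarget = kitHistory.find("v1");
```

Because every published version is frozen, an audit months later sees exactly the files that were shipped under that tag.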

With Jozu, you don’t need to stress over how to deploy or update your AI/ML project.

This tool is perfect for machine learning developers or companies that need a simple, secure, and scalable AI/ML workflow for their enterprise deployment needs.

ClaudiaJS - A Serverless JavaScript

ClaudiaJS is another option for deploying your serverless application. This tool is great for JavaScript developers who want to experiment with a serverless environment by deploying Node.js projects on AWS Lambda.

This tool packages your Node.js project together with its dependencies and uploads the code to AWS with a single command. It also sets up configuration such as security roles and API Gateway to help the deployment process. For example, you can add deploy and update scripts to your package.json:

{
  "scripts": {
    "deploy": "claudia create --region us-east-1 --api-module api",
    "update": "claudia update"
  }
}

You can also add a pipeline step for production release using Claudia:

{
  "scripts": {
    "release": "claudia set-version --version production"
  }
}

You can also build CI/CD pipelines using ClaudiaJS to automate deployments:

jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout code
        uses: actions/checkout@v4
      - name: Install dependencies
        run: npm install
      - name: Deploy to AWS Lambda
        # "deploy" creates the function; once it exists, run "npm run update" instead
        run: npm run deploy

Something great about ClaudiaJS is that it uses standard npm packaging conventions that JavaScript developers are already familiar with. It also manages multiple versions of the uploaded project on AWS Lambda for production, development, and testing environments.

Why use ClaudiaJS?

- ClaudiaJS removes the manual setup for AWS Lambda and API Gateway and lets developers focus on building the project rather than on pipeline workflows.

- It is lightweight and does not need any runtime dependencies, making it easy to integrate into existing projects.

ClaudiaJS gives you a streamlined process for deploying serverless applications, so you can easily deploy any Node.js project.

Once your serverless application is up and running on the cloud, you need to automate your workflows and monitor them for possible downtime or software failure to ensure everything runs smoothly.

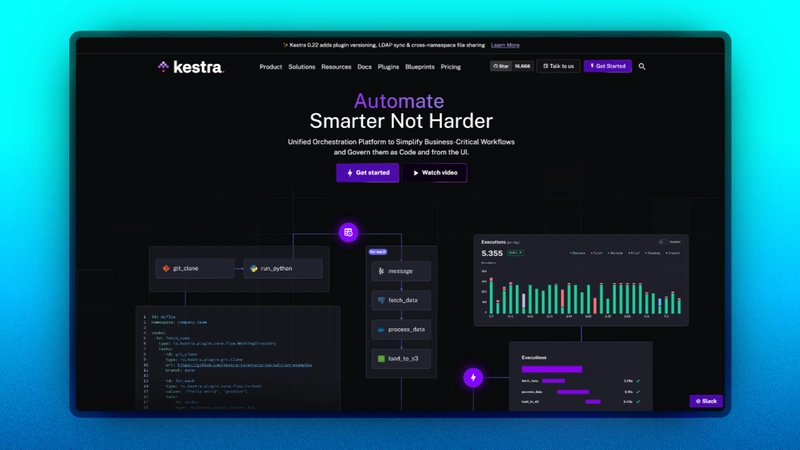

Kestra - The Open Source Orchestration Platform

Kestra manages processes and workflows in your cloud-native environments, such as data workflows or automation needed to run your application.

Workflows are declared in YAML, which makes them easy to manage and to integrate with other tools and platforms.

With Kestra, you can define a workflow and manage pipelines for your serverless applications:

id: simple-workflow
namespace: tutorial

tasks:
  - id: extract-data
    type: io.kestra.plugin.core.http.Download
    uri: https://example.com/data.json

  - id: transform-data
    type: io.kestra.plugin.scripts.python.Script
    containerImage: python:3.11-alpine
    inputFiles:
      data.json: "{{ outputs['extract-data'].uri }}"
    script: |
      import json

      with open("data.json", "r") as file:
          data = json.load(file)

      transformed_data = [{"key": item["key"], "value": item["value"]} for item in data]

      with open("transformed.json", "w") as file:
          json.dump(transformed_data, file)
    outputFiles:
      - "*.json"

  - id: upload-data
    type: io.kestra.plugin.aws.s3.Upload
    accessKeyId: "{{ secret('AWS_ACCESS_KEY_ID') }}"
    secretKeyId: "{{ secret('AWS_SECRET_KEY_ID') }}"
    region: us-east-1
    bucket: my-bucket
    key: transformed-data.json
    from: "{{ outputs['transform-data'].outputFiles['transformed.json'] }}"
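The `outputs` expressions in the flow above are what tie the tasks together: each task can read what earlier tasks produced. The idea can be sketched as a tiny sequential runner in plain TypeScript. This is a toy model for intuition, not Kestra’s engine. `Task`, `runFlow`, and the fake URIs are assumptions.

```typescript
// Outputs of every finished task, keyed by task id.
type Outputs = Record<string, Record<string, string>>;

interface Task {
  id: string;
  // Receives the outputs of all earlier tasks and returns its own outputs.
  run: (outputs: Outputs) => Record<string, string>;
}

// Runs tasks in declaration order and records each task's outputs under its id.
function runFlow(tasks: Task[]): Outputs {
  const outputs: Outputs = {};
  for (const task of tasks) {
    outputs[task.id] = task.run(outputs);
  }
  return outputs;
}

const flowOutputs = runFlow([
  // Pretends to download a file and reports where it was stored.
  { id: "extract-data", run: () => ({ uri: "file:///tmp/data.json" }) },
  {
    // Reads the previous task's uri and reports the location of the transformed file.
    id: "transform-data",
    run: (outputs) => ({
      uri: outputs["extract-data"].uri.replace("data", "transformed"),
    }),
  },
  {
    // Pretends to upload the transformed file and reports the stored key.
    id: "upload-data",
    run: (outputs) => ({ key: "uploaded:" + outputs["transform-data"].uri }),
  },
]);

console.log(flowOutputs["upload-data"].key);
```

Note that the task ids contain hyphens, so the sketch looks them up with bracket notation rather than dot access.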

You can also build an ETL (Extract, Transform, and Load) pipeline. This pipeline collects data from different sources, transforms it into your specific format, and loads it into a system of your choice, such as a database.

id: etl-pipeline
namespace: company.team

tasks:
  - id: download-orders
    type: io.kestra.plugin.core.http.Download
    uri: https://example.com/orders.csv

  - id: download-products
    type: io.kestra.plugin.core.http.Download
    uri: https://example.com/products.csv

  - id: join-data
    type: io.kestra.plugin.jdbc.duckdb.Query
    inputFiles:
      orders.csv: "{{ outputs['download-orders'].uri }}"
      products.csv: "{{ outputs['download-products'].uri }}"
    sql: |
      SELECT o.order_id, o.product_id, p.product_name
      FROM read_csv_auto('{{ workingDir }}/orders.csv') o
      JOIN read_csv_auto('{{ workingDir }}/products.csv') p
        ON o.product_id = p.product_id
    store: true

  - id: upload-joined-data
    type: io.kestra.plugin.aws.s3.Upload
    accessKeyId: "{{ secret('AWS_ACCESS_KEY_ID') }}"
    secretKeyId: "{{ secret('AWS_SECRET_KEY_ID') }}"
    region: us-east-1
    bucket: my-bucket
    key: joined-data.csv
    from: "{{ outputs['join-data'].uri }}"

Why use Kestra?

- Most applications depend on data pipelines or automation workflows to run smoothly, and managing such workflows on a large scale can be overwhelming. Kestra solves this problem by providing a scalable engine that can be integrated with any cloud service or database.

- Unlike traditional tools like Apache Airflow, Kestra is built for any modern, containerized environment with built-in observability.

Kestra is most useful when managing complex data pipelines or when implementing cloud-native workflow automation with Kubernetes-ready deployment. This tool is a great alternative to Apache Airflow.

Conclusion

Over the last few years, the cloud-native and serverless space has become more flexible and modular.

We no longer need to settle for complex tools when frameworks like Encore, ClaudiaJS, and the Serverless Framework, and tools like StackQL, Jozu, Kestra, and Pulumi, make the work so much easier.

These tools not only save time and tears, but they also give us creative control over how we build the infrastructure we use.

Know of other must-have tools? Drop them in the comments—I’d love to hear your recommendations!

Also, follow me for more content like this.

For paid collaboration, mail me at: [email protected].

Thank you for reading!