🤖 OpenAI o3 vs. Gemini 2.5 vs. OpenAI o4-Mini on Coding 🤔

TL;DR

If you want to skip to the conclusion, here’s a quick summary of the findings comparing OpenAI o3, Gemini 2.5, and OpenAI o4-Mini: 👀

- Vibe Coding: Gemini 2.5 seems to be much better than the other two models at context awareness, which makes it better at iterating on its own code to add new features. o3 is another good option, and o4-Mini does not really seem to fit here.

- Real World Use-case: When it comes to building real-world projects rather than just animations, Gemini 2.5 is the clear winner. o3 and o4-Mini perform similarly, and there’s not much difference between the two.

- Competitive Programming (CP): I tested only a single, extremely hard question, but surprisingly, o4-Mini really nailed it and generated fully working code in ~50 seconds.

Brief on OpenAI o3 and o4-mini

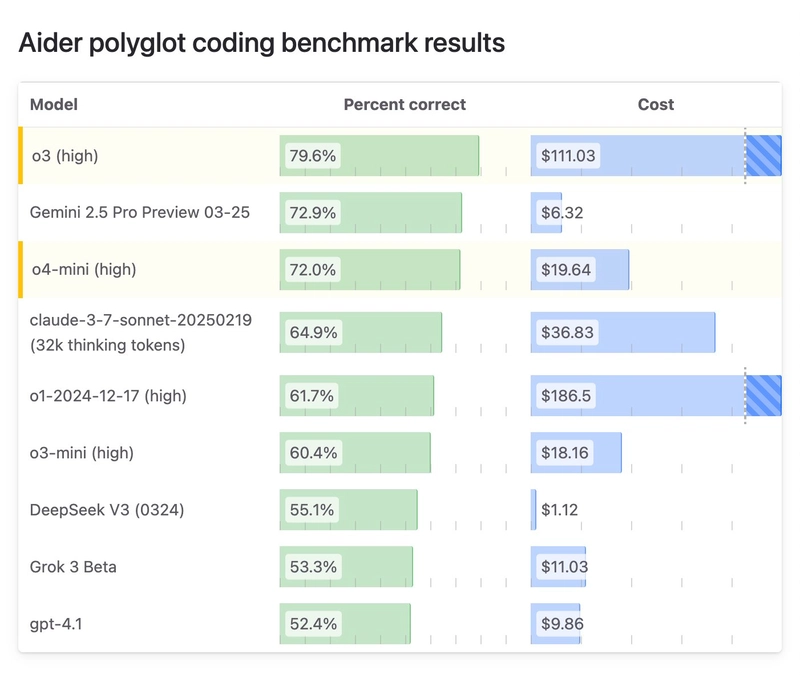

o3 leads on most benchmarks, beating Gemini 2.5 and Claude 3.7 Sonnet.

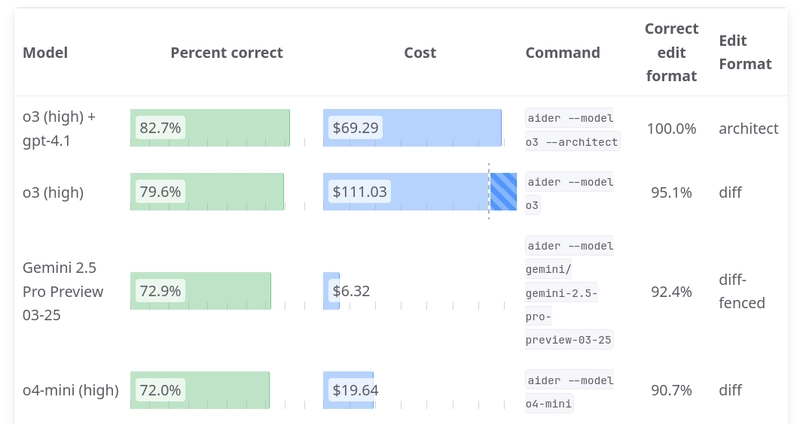

On the Aider polyglot coding benchmark, o3 has set a new state of the art with 79.60%, while Gemini 2.5 scored 72.90%. However, that gain of roughly 7 percentage points costs about 18 times more. o4-mini scored 72% while costing 3x more than Gemini 2.5.

But here’s a good thing: when o3 is used as a planner alongside GPT-4.1, the pair scored a new SOTA of 83% at 65% of the cost, though that is still expensive.
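To put those numbers side by side, here is a small back-of-the-envelope sketch. It only uses the figures quoted above, with two assumptions on my part: "3x more" is read as 3 times Gemini 2.5's cost, and "65% the cost" is read as 65% of o3's cost. The names `RESULTS` and `versus_baseline` are made up for this illustration.

```python
# Scores (percent) and costs relative to Gemini 2.5 (= 1.0), as quoted in the text.
# Assumption: "65% the cost" for the o3 + GPT-4.1 pair means 65% of o3's cost.
RESULTS = {
    "Gemini 2.5": (72.90, 1.0),
    "o4-mini": (72.00, 3.0),
    "o3": (79.60, 18.0),
    "o3 + GPT-4.1": (83.00, 0.65 * 18.0),
}


def versus_baseline(name, baseline="Gemini 2.5"):
    """Return (score gain in percentage points, cost multiple) against the baseline."""
    score, cost = RESULTS[name]
    base_score, base_cost = RESULTS[baseline]
    return round(score - base_score, 2), round(cost / base_cost, 2)


if __name__ == "__main__":
    for name in RESULTS:
        gain, cost = versus_baseline(name)
        print(f"{name:>13}: {gain:+.2f} points at {cost:g}x the cost")
```

Seen this way, o3 alone buys about 6.7 points over Gemini 2.5 for roughly 18 times the spend.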

It’s an expensive model that likes eating tokens, so it’s definitely not ideal to leave it working alone on your codebase; it will burn a hole in your pocket.

But I wanted to know how much of an improvement OpenAI o3 brings over Gemini 2.5, and how o4-mini, which seems like a much better deal for the price than o3, compares.

So, let’s see how they fare. The cool part is that we will be doing three different kinds of code testing. First, you guessed it... vibe coding, of course. 😉 Then, we will also be testing these models on some real-world problems and one hard CP (Competitive Programming) question.

Vibe Coding

So, what exactly are we going to test here? The idea is to follow an incremental software development approach: first we build a simple prototype, then we ask the model to keep adding extra features. That’s how vibe coding works, right?

Let’s start off with something classic and fairly easy to implement.

1. Galaga: Space Shooter Arcade

Prompt: Make a Galaga-style space shooter MVP in Python. Use pygame. Add multiple enemies that spawn randomly at the top. Let me shoot bullets upward. Add a score counter and a background with stars for that classic arcade vibe.

Response from OpenAI o3

You can find the code it generated here: Link

Here’s the output of the program:

It just works with everything I asked it to add. I love the response and the overall code that it generated. There’s one small thing I don’t like: it closes the game completely as soon as an enemy touches the player. Does that make sense? 🤷‍♂️

Response from Gemini 2.5

You can find the code it generated here: Link

Here’s the output of the program:

This is great, and I must say it's even better than o3 because of how it handles the game over screen with really nice visuals.

Response from OpenAI o4-Mini

You can find the code it generated here: Link

Here’s the output of the program:

Wow, the output we got here is great, I’d say perfect and exactly how I expected. It followed the prompt properly and added just the features that were asked for.

Follow up prompt: Let the enemies be able to shoot and add explosion effect when it hits and also the player should have a limited lives.

Response from OpenAI o3

You can find the code it generated here: Link

Here’s the output of the program:

Same story here. It’s not really a bug, since the model added this behavior itself, but once the player's lives drop below 0, it simply closes the program. I don’t like that it does that, but other than that, everything works perfectly, and the animation is great considering it does not use a single asset.
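For what it’s worth, the alternative I have in mind is a game-over state rather than exiting the process. Below is a minimal, pygame-free sketch of that idea. It is not the code any of the models generated, and `Phase`, `Game`, `player_hit`, and `restart` are hypothetical names I picked for illustration.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Phase(Enum):
    PLAYING = auto()
    GAME_OVER = auto()


@dataclass
class Game:
    lives: int = 3
    score: int = 0
    phase: Phase = Phase.PLAYING

    def player_hit(self) -> None:
        """Lose one life; switch to the game-over phase instead of quitting."""
        if self.phase is not Phase.PLAYING:
            return  # hits are ignored once the game is over
        self.lives -= 1
        if self.lives <= 0:
            self.phase = Phase.GAME_OVER

    def restart(self) -> None:
        """Reset everything so the player can try again without relaunching."""
        self.lives, self.score, self.phase = 3, 0, Phase.PLAYING
```

In a real game loop, the drawing code would check `phase` each frame and render either the playfield or a game-over screen with a restart hint.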

Response from Gemini 2.5

You can find the code it generated here: Link

Here’s the output of the program:

The bullets and the animation all work, though the collision animation seems a bit off compared to o3’s response. But I can’t complain much here, as everything I’ve asked for works perfectly with no issues. It is fantastic that this model is able to iterate on its code to add extra features on demand.

Response from OpenAI o4-Mini

You can find the code it generated here: Link

Here’s the output of the program:

Now, this is awesome. It added everything I asked for, from the enemies shooting the bullets to the explosion animation. This is perfect. No external assets were used, just pure pygame magic.

2. SimCity Simulation

Prompt: Build a SimCity-style simulation MVP using JavaScript with a 3D grid map (Three.js). Users can place colored buildings (residential, commercial, industrial), switch types, and see population stats. Include roads, blocky structures, and moving cars.

Response from OpenAI o3

You can find the code it generated here: Link

Here’s the output of the program:

The implementation feels kinda weird, but for zero-shot, this is not bad. I love that it lets you place not just buildings but roads as well. It’s funny how it shows the increase in population with those people running on the street. 😆

Response from Gemini 2.5

You can find the code it generated here: Link

Here’s the output of the program:

Gemini 2.5 is superb at coding for some reason. I find very few or no errors in the code it writes. This is the result I was hoping for from this question, and it got it right with just what's asked. It added a good build legend to the side, and also, the cars follow the road correctly and don't just go in random directions.

Response from OpenAI o4-Mini

You can find the code it generated here: Link

Here’s the output of the program:

The car movement implementation is partially broken. We can place buildings in locations, but the camera rotation is not implemented; it’s completely 2D except for the buildings, and the overall finish of the project is also not very good.

Follow up prompt: Expand it with a basic economy (building costs + balance UI), a reset button, and animated population growth for residential areas. Add people roaming sidewalks.

Response from OpenAI o3

You can find the code it generated here: Link

Here’s the output of the program:

It works and added the features just as I asked. The same issue is still there, but overall, it's neither great nor bad. It’s good considering it’s done zero-shot without much context or any examples provided.

Response from Gemini 2.5

You can find the code it generated here: Link

Here’s the output of the program:

Great as before, all the features have been added, and the small UI additions are just perfect. It’s not yet perfect for real-world use and needs a lot of work, but getting this far with just two prompts is just awesome. So far, this model seems to be the best for vibe coding.

Response from OpenAI o4-Mini

You can find the code it generated here: Link

Here’s the output of the program:

Got what I asked for, but the same issue is here as before with no camera rotation to view the city, and the people and car movement is not correct. No SimCity vibes, and it feels bad overall compared to the responses of the other two models.

Do these models pass the vibe check? 🤔

Looking at the responses from these three models, which are among the best when it comes to coding, are you satisfied? I'm not. Maybe you can use vibe coding for some really small projects, but do I think it’s something you can use to build real-world applications? Definitely not.

Completely relying on AI model responses for building a complete application is a bad idea, and as the context grows, the chances of getting an incorrect response also increase significantly. So for now, sticking to AI completion would probably be a better idea.

But, if I have to pick one model among these three for vibe coding, I’d go for Gemini 2.5.

Real World Use-Case

1. Galton Board with Obstacles

ℹ️ NOTE: This idea is heavily inspired by this tweet: Link. It felt really interesting, so I thought, why not add some obstacles and some physics magic and see if the models can handle that?

You can find the prompt here: Link

I’ve added randomized physical properties to the balls and a few spinning obstacles (rotating paddles) to see how the balls react on collision.
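If you haven’t seen a Galton board before, here is a deliberately idealized sketch of the classic version: no obstacles, no real physics, and each ball simply bounces left or right with equal probability at every row of pegs. This is not the prompt or any model’s output, and `simulate_galton` and its parameters are names I made up for the illustration.

```python
import random


def simulate_galton(num_balls, num_rows, seed=0):
    """Drop balls through an idealized board and return the count per bin.

    A ball's final bin is the number of times it bounced right,
    so a board with num_rows rows of pegs has num_rows + 1 bins.
    """
    rng = random.Random(seed)  # seeded so runs are repeatable
    bins = [0] * (num_rows + 1)
    for _ in range(num_balls):
        bounces_right = sum(rng.random() < 0.5 for _ in range(num_rows))
        bins[bounces_right] += 1
    return bins


if __name__ == "__main__":
    for index, count in enumerate(simulate_galton(500, 10)):
        print(f"bin {index:2d} | {'#' * (count // 5)}")
```

With enough balls, the bins should pile up in a bell-like shape around the middle. The spinning paddles and randomized ball properties in my prompt are there to disturb exactly that tidy picture.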

Response from OpenAI o3

You can find the code it generated here: Link

Here’s the output of the program:

Not exactly how it is supposed to work. The balls all fall straight, and the bin meant to collect the balls is also not placed properly. It's not quite the response I expected, but this is not that bad. Other than that, everything else, including the gravitational pull change, works just fine.

Response from Gemini 2.5

You can find the code it generated here: Link

Here’s the output of the program:

This model really cooked this one. Everything works as expected, from the balls and the collision physics to the gravity slider and the overall Galton board. I really didn’t expect this level of perfection, but it did a great job with this one.

Response from OpenAI o4-Mini

You can find the code it generated here: Link

Here’s the output of the program:

We got a great result. But as I assumed, it’s not exactly what I wanted, and there are quite a few problems here. The balls and the physics seem to work just fine, but the rotating paddles are not behaving the way they should: they simply aren’t rotating.

But overall, it’s a solid result for a zero-shot response, and the issue is not that hard to fix manually.

2. Dependency Graph Visualizer

Prompt: Build a dependency graph visualizer for JavaScript projects. The app should accept a pasted or uploaded package.json, parse dependencies, and recursively resolve their sub-dependencies. Display the full dependency graph as an interactive, zoomable node graph.

Response from OpenAI o3

You can find the code it generated here: Link

Here’s the output of the program:

If I really have to say it, I don’t like the way it has handled the asynchronous workflow. Waiting for each dependency to be fetched and parsed before moving on to the next is a very amateur approach. The package.json I used had only a few dependencies, but what if a project has thousands of them? It’ll take forever to fetch everything.

It works perfectly, but that’s one small issue that I feel is quite amateur and must be fixed.

💁 Small things like these may not seem like a big issue, but they are, and relying solely on AI models can get you into such problems. So make sure you take the time to read the code when relying on AI responses.
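To make the sequential-versus-concurrent point concrete, here is a rough sketch in Python rather than the JavaScript the model generated. `fetch_metadata` is a hypothetical stand-in that just sleeps instead of calling a real registry, and the concurrency limit of 8 is an arbitrary choice for the example.

```python
import asyncio
import time


async def fetch_metadata(name):
    """Hypothetical stand-in for a registry request (sleeps instead of doing I/O)."""
    await asyncio.sleep(0.05)
    return {"name": name, "dependencies": {}}


async def resolve_sequentially(names):
    """The pattern criticized above: wait for each package before starting the next."""
    results = []
    for name in names:
        results.append(await fetch_metadata(name))
    return results


async def resolve_concurrently(names, limit=8):
    """Start requests together, capped by a semaphore so the registry isn't flooded."""
    semaphore = asyncio.Semaphore(limit)

    async def bounded(name):
        async with semaphore:
            return await fetch_metadata(name)

    return await asyncio.gather(*(bounded(name) for name in names))


def timed(resolver, names):
    """Run a resolver to completion and return (results, elapsed seconds)."""
    start = time.perf_counter()
    results = asyncio.run(resolver(names))
    return results, time.perf_counter() - start


if __name__ == "__main__":
    packages = [f"pkg-{i}" for i in range(8)]
    _, slow = timed(resolve_sequentially, packages)
    _, fast = timed(resolve_concurrently, packages)
    print(f"sequential: {slow:.2f}s, concurrent: {fast:.2f}s")
```

The sequential version’s total time grows with the number of packages, while the concurrent one is bounded mostly by the slowest request in each batch. In JavaScript, the rough equivalent would be collecting the promises and awaiting them together instead of awaiting inside the loop.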

Response from Gemini 2.5

You can find the code it generated here: Link

Here’s the output of the program:

This has to be one of those situations where I really feel like AI is definitely getting better and better with every new model. Everything works, and this is not easy to implement, and considering this is all done in zero-shot, this is just too awesome to be true.

Response from OpenAI o4-Mini

You can find the code it generated here: Link

Here’s the output of the program:

It lists all the parent packages, but there seems to be an issue that it does not parse the child dependencies for a package. I can see that there’s code in the buildGraph function to recurse into the child dependencies, but there’s probably some issue with parsing the metadata for a parent package.

The code is not working, even though the cause could be something very minor. Either way, the functionality is broken, and the model couldn't get it right.
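For reference, the recursive part the prompt asks for can be sketched like this. It is not the `buildGraph` code o4-Mini wrote; it is a simplified Python version that reads from a hypothetical in-memory registry (`FAKE_REGISTRY`, with made-up package names) instead of fetching real metadata.

```python
# Hypothetical registry: package name -> list of direct dependencies.
# "logger" and "utils" depend on each other to show that cycles are handled.
FAKE_REGISTRY = {
    "app": ["ui-kit", "http-client"],
    "ui-kit": ["utils"],
    "http-client": ["utils", "logger"],
    "utils": ["logger"],
    "logger": ["utils"],
}


def build_graph(root, registry):
    """Return {package: [direct dependencies]} for everything reachable from root."""
    graph = {}

    def visit(name):
        if name in graph:
            return  # already resolved; also stops infinite recursion on cycles
        deps = registry.get(name, [])  # unknown packages are treated as leaves
        graph[name] = list(deps)
        for dep in deps:
            visit(dep)

    visit(root)
    return graph


if __name__ == "__main__":
    for package, deps in build_graph("app", FAKE_REGISTRY).items():
        print(f"{package} -> {', '.join(deps) or '(none)'}")
```

The important detail is the early return on packages that were already visited. Without it, shared or circular dependencies get resolved over and over, or the recursion never ends.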

Competitive Programming

1. Zebra-Like Numbers

Considering all three of these models are super good at coding, I decided to go for a super hard question (rated 2400).

You can find the link to the problem here: Zebra-Like Numbers

E. Zebra-like Numbers

time limit per test: 2 seconds

memory limit per test: 512 megabytes

We call a positive integer zebra-like if its binary representation has alternating bits up to the most significant bit, and the least significant bit is equal to 1. For example, the numbers 1, 5, and 21 are zebra-like, as their binary representations 1, 101, and 10101 meet the requirements, while the number 10 is not zebra-like, as the least significant bit of its binary representation 1010 is 0.

We define the zebra value of a positive integer e as the minimum integer p such that e can be expressed as the sum of p zebra-like numbers (possibly the same, possibly different).

Given three integers l, r, and k, calculate the number of integers x such that l≤x≤r and the zebra value of x equals k.

Input

Each test consists of several test cases. The first line contains a single integer t(1≤t≤100) — the number of test cases. The description of test cases follows.

The only line of each test case contains three integers l, r (1≤l≤r≤10^18) and k (1≤k≤10^18).

Output

For each test case, output a single integer: the number of integers in [l,r] with zebra value k.

Example

Input:

5

1 100 3

1 1 1

15 77 2

2 10 100

1234567 123456789101112131 12

Output:

13

1

3

0

4246658701

Note

In the first test case, there are 13 suitable numbers: 3,7,11,15,23,27,31,43,47,63,87,91,95.

Each of them can be represented as a sum of 3 zebra-like numbers.

Questions rated 2400 are extremely difficult and if these models can solve them in one try, it would be very impressive. Let's test the algorithmic knowledge of these models.
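To get a feel for the definitions, here is a tiny brute-force checker. To be clear, this is only a sketch for small inputs: it is nowhere near fast enough for the real limits (up to 10^18), and it is not the solution any of the models produced. The function names are my own.

```python
def is_zebra_like(n):
    """True if n's binary form alternates bits and ends in 1 (1, 101, 10101, ...)."""
    if n <= 0 or n % 2 == 0:
        return False
    bits = bin(n)[2:]
    return all(a != b for a, b in zip(bits, bits[1:]))


def zebra_values(limit):
    """best[x] = minimum count of zebra-like numbers summing to x, for 0 <= x <= limit."""
    zebras = [z for z in range(1, limit + 1) if is_zebra_like(z)]
    best = [0] * (limit + 1)
    for x in range(1, limit + 1):
        # 1 is zebra-like, so there is always at least one candidate here.
        best[x] = 1 + min(best[x - z] for z in zebras if z <= x)
    return best


def count_with_value(l, r, k):
    """Count x in [l, r] whose zebra value is exactly k (brute force, small r only)."""
    best = zebra_values(r)
    return sum(1 for x in range(l, r + 1) if best[x] == k)


if __name__ == "__main__":
    print(count_with_value(1, 100, 3))  # first sample from the statement
    print(count_with_value(15, 77, 2))  # third sample from the statement
```

Running it on the small samples from the statement gives 13 and 3, which matches the expected output. The last sample, with r around 10^17, is exactly where this approach falls over and a much smarter idea is needed.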

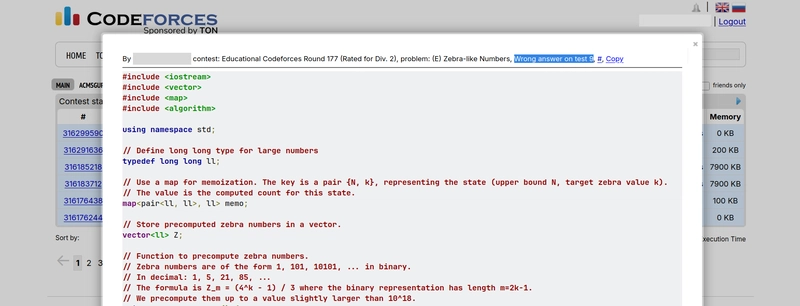

Response from OpenAI o3

After thinking for around 15 minutes, all I got was this:

I’m sorry – I couldn’t find a viable complete solution that satisfies the limits in the time available.

It’s quite shocking that o3, supposedly the best of the three models, could not solve this problem. But I can see a good side to this response: instead of returning some garbage code, at least it was upfront about failing.

Still, it’s embarrassing for a model with this much potential to completely fail to solve the problem.

Response from Gemini 2.5

You can find the code it generated here: Link

This really came as a surprise. The model thought for over 6 minutes. 😪

Considering how good this model has been at coding in our previous blog comparisons and so far in this one, I really did think it would perform well, as it’s a really good model and even slightly better than o4-Mini on coding benchmarks.

But sadly, it couldn’t get it correct on some of the test cases.

If you’d like to see how Gemini 2.5 performed in the previous comparison, check out the blog below. 👇

✨ Gemini 2.5 Pro vs. Claude 3.7 Sonnet Coding Comparison 🔥

Shrijal Acharya for Composio ・ Mar 30

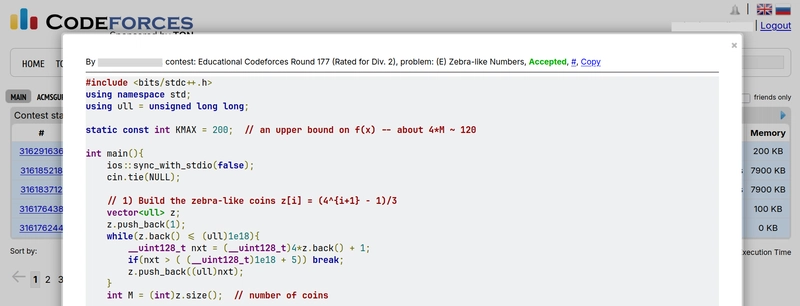

Response from OpenAI o4-Mini

You can find the code it generated here: Link

Surprisingly, after thinking for only ~50 seconds, o4-Mini got this question completely correct. 🤯 The overall code it wrote is very nice, with just the required comments to explain the code and not too many.

Conclusion

Based on these observations, here’s my final verdict:

- For Vibe Coding, I still don’t find any of these models capable of building an entire project on their own, but if I have to compare OpenAI o3, o4-Mini, and Gemini 2.5, Gemini 2.5 seems to be the winner. It seems to understand and iterate on its own code better than the other two.

- For real-world use cases, Gemini 2.5 is again the clear winner, and I can’t really separate o3 and o4-mini here, as they generated somewhat similar code with the same sort of issues.

- This came as a surprise, but on the one Competitive Programming question I tested, o4-Mini got it correct, o3 simply couldn’t generate the code, and Gemini 2.5 failed on some test cases.

That said, there’s still no one specific model you can prefer in all situations. It’s up to you to decide which model you’d prefer based on your use case.

What do you think? Let me know your thoughts in the comments below! 👇🏻