Everything about AI Function Calling and MCP, the keyword for Agentic AI

Summary

While building an Agentic AI framework specialized in AI function calling, we learned a lot about function calling. This article describes everything we discovered about it during the framework's development.

- Github Repository: https://github.com/wrtnlabs/agentica

- Homepage: https://wrtnlabs.io/agentica

Main story of this article:

- In 2023, when OpenAI announced AI function calling, many people predicted it would conquer the world. However, function calling couldn't deliver on this promise and was overshadowed by agent workflow approaches.

- We identified the reason: a lack of understanding about JSON schema and insufficient compiler-level support. Agent frameworks should strengthen fundamental computer science principles rather than focusing on fancy techniques.

- OpenAPI, which is much older and more widely adopted than MCP, can accomplish the same goals when a library developer has strong JSON schema understanding and compiler skills.

- With our orchestration strategy, function calling can improve performance while reducing token costs, even with small models like gpt-4o-mini (estimated at around 8b parameters).

- Let's make function calling great again with Agentica.

1. Preface

In 2023, OpenAI announced AI Function Calling.

At that time, many AI researchers predicted it would revolutionize the industry. Even in my own network, numerous developers were rushing to build new AI applications centered around function calling.

The application market will be reorganized around AI chatbots.

Developers will only create or gather API functions, and AI will automatically call them. There will be no need to create complex frontend/client applications anymore.

Do everything with chat.

But what about today? Has function calling conquered the world? No. Rather than function calling, agent workflow-driven development has become dominant in the AI development ecosystem. LangChain is perhaps the most representative framework in this workflow ecosystem.

Currently, many AI developers claim that AI function calling is challenging to implement, consumes too many tokens, and causes frequent hallucinations. They argue that for function calling to gain widespread adoption, AI models must become much cheaper, larger, and smarter.

However, we have a different perspective. Today's LLMs are already capable enough, even the smaller 8b-sized models that can run on personal laptops. Function calling hasn't gained widespread adoption because AI framework developers haven't been able to build function schemas accurately and effectively.

They haven't focused enough on fundamentals like computer science, instead relying excessively on flashy techniques.

2. Concepts

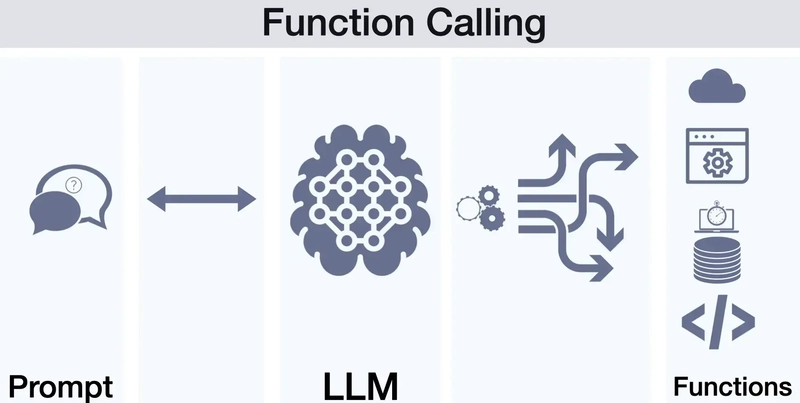

2.1. AI Function Calling

Function calling enables AI models to interact with external systems by identifying when a function should be called and generating the appropriate JSON to call that function.

Instead of generating free-form text, the model recognizes when to invoke specific functions based on user requests and provides structured data for those function calls.
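A minimal sketch of the application side of this loop, in TypeScript. The `ToolCall` shape, the `tools` registry, and the `divide` function are illustrative assumptions rather than any vendor's SDK types: the model only returns a function name plus JSON-encoded arguments, and the application is what actually runs the function.

```typescript
// Hypothetical shape of a tool call emitted by a model: a function name plus
// its arguments serialized as a JSON string (vendors differ in the details).
interface ToolCall {
  name: string;
  arguments: string;
}

// Real functions, registered under the names exposed to the model.
type ToolFunction = (args: Record<string, unknown>) => unknown;

const tools: Record<string, ToolFunction> = {
  divide: (args) => {
    const x = Number(args.x);
    const y = Number(args.y);
    if (y === 0) throw new Error("y must not be zero");
    return x / y;
  },
};

// Look up the requested function, parse the JSON arguments, and execute it.
function dispatch(call: ToolCall): unknown {
  const fn = tools[call.name];
  if (fn === undefined) throw new Error(`Unknown function: ${call.name}`);
  const args = JSON.parse(call.arguments) as Record<string, unknown>;
  return fn(args);
}

// Example: the model decided that "Calculate 10 / 4" needs the divide function.
const result = dispatch({ name: "divide", arguments: '{"x": 10, "y": 4}' });
console.log(result); // 2.5
```

In a real integration, the value returned by `dispatch` is sent back to the model as the function's result so that it can compose its final answer.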

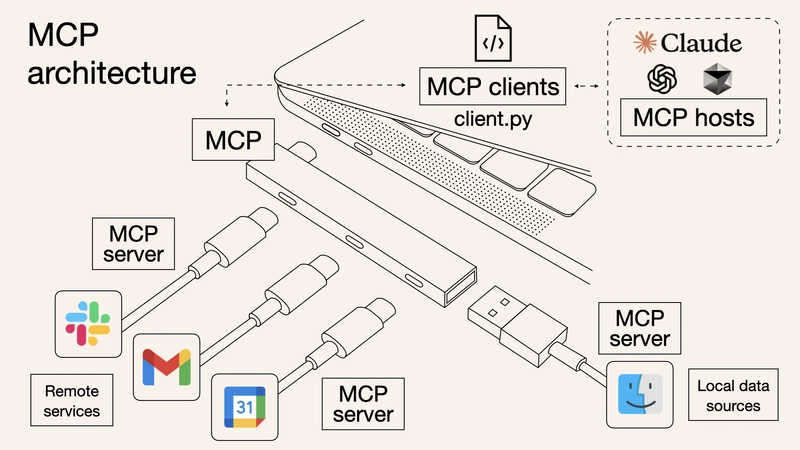

2.2. Model Context Protocol

The Model Context Protocol is an open standard designed to facilitate secure, two-way connections between AI tools and external data sources. It aims to streamline the integration of AI assistants with various tools and data, allowing for more dynamic interactions.

MCP standardizes how AI applications, such as chatbots, interact with external systems, enabling them to access and utilize data more effectively.

It allows for flexible and scalable integrations, making it easier for developers to build applications that can adapt to different data sources and tools.
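MCP messages are JSON-RPC 2.0: a client discovers tools with a `tools/list` request and invokes one with `tools/call`. The sketch below shows only those message shapes, using a hypothetical in-process `handle` function instead of a real stdio or HTTP transport; the `divide` tool is illustrative, and connection setup (`initialize`) is omitted.

```typescript
// Minimal JSON-RPC 2.0 message shapes, as used by MCP.
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: { name?: string; arguments?: Record<string, unknown> };
}

interface JsonRpcResponse {
  jsonrpc: "2.0";
  id: number;
  result?: unknown;
  error?: { code: number; message: string };
}

// A tool as reported by tools/list: name, description, and a JSON schema
// describing its input.
const divideTool = {
  name: "divide",
  description: "Calculate x / y",
  inputSchema: {
    type: "object",
    properties: { x: { type: "number" }, y: { type: "number" } },
    required: ["x", "y"],
  },
};

// Hypothetical in-process server; a real MCP server receives these messages
// over a transport such as stdio or HTTP.
function handle(req: JsonRpcRequest): JsonRpcResponse {
  if (req.method === "tools/list") {
    return { jsonrpc: "2.0", id: req.id, result: { tools: [divideTool] } };
  }
  if (req.method === "tools/call") {
    const params = req.params;
    if (params === undefined || params.name !== divideTool.name) {
      // -32602 is JSON-RPC's "Invalid params" code.
      return { jsonrpc: "2.0", id: req.id, error: { code: -32602, message: "Unknown tool" } };
    }
    const args = params.arguments ?? {};
    const value = Number(args.x) / Number(args.y);
    // Tool results come back as a list of content items.
    return {
      jsonrpc: "2.0",
      id: req.id,
      result: { content: [{ type: "text", text: String(value) }], isError: false },
    };
  }
  // -32601 is JSON-RPC's "Method not found" code.
  return { jsonrpc: "2.0", id: req.id, error: { code: -32601, message: "Method not found" } };
}

console.log(handle({ jsonrpc: "2.0", id: 1, method: "tools/list" }));
```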

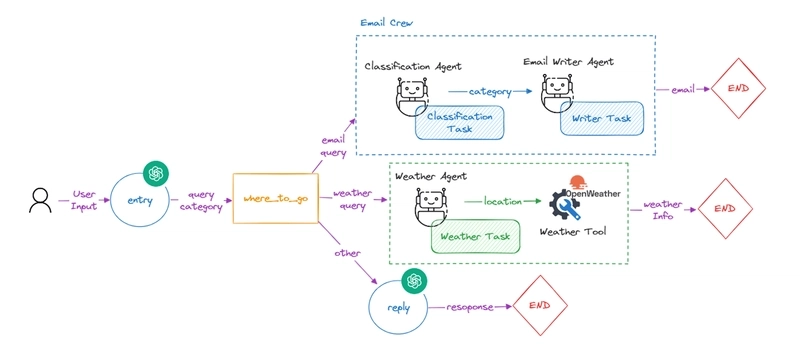

2.3. Agent Workflow

An Agent Workflow is a structured approach to AI agent operation where multiple specialized AI agents work collaboratively to accomplish complex tasks. Each agent in the workflow has specific responsibilities and expertise, creating a pipeline of processes that handle different aspects of a larger task.

Note, however, that agent workflows are primarily designed for specific-purpose applications rather than general-purpose agents. They excel at well-defined problems with clear boundaries, where the task can be decomposed into specific steps requiring different expertise. They are not typically intended to create a single "do-everything" agent.
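To contrast this with function calling, here is a minimal sketch of a workflow in TypeScript. The stages (`researcher`, `writer`, `reviewer`) are hypothetical stubs standing in for LLM-backed agents; the point is that the order of steps is fixed in code rather than chosen by the model at run time.

```typescript
// State handed from one stage of the workflow to the next.
interface ReportState {
  topic: string;
  notes: string[];
  draft?: string;
  reviewed?: boolean;
}

// Each "agent" owns one responsibility. In a real workflow each stage would
// prompt an LLM; these stubs only show the data flow.
type Stage = (state: ReportState) => ReportState;

const researcher: Stage = (s) => ({ ...s, notes: [...s.notes, `facts about ${s.topic}`] });
const writer: Stage = (s) => ({ ...s, draft: `Report on ${s.topic}: ${s.notes.join("; ")}` });
const reviewer: Stage = (s) => ({ ...s, reviewed: s.draft !== undefined });

// The pipeline is fixed at design time: the developer, not the model,
// decides which step runs next.
const workflow: Stage[] = [researcher, writer, reviewer];

function run(topic: string): ReportState {
  return workflow.reduce<ReportState>((state, stage) => stage(state), { topic, notes: [] });
}

console.log(run("GPU prices").draft); // Report on GPU prices: facts about GPU prices
```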

Workflow-driven development is the current trend in AI development, but it is impossible to achieve Agentic AI with this method. It is an alternative that emerged when Function Calling did not work as well as expected.

3. Function Schema

3.1. JSON Schema Specification

```typescript
{
  name: "divide",
  parameters: {
    type: "object",
    properties: {
      x: {
        type: "number",
      },
      y: {
        anyOf: [ // not supported in Gemini
          {
            type: "number",
            exclusiveMaximum: 0, // not supported in OpenAI and Gemini
          },
          {
            type: "number",
            exclusiveMinimum: 0, // not supported in OpenAI and Gemini
          },
        ],
      },
    },
    required: ["x", "y"],
  },
  description: "Calculate x / y",
}
```

You might have seen reports like this in AI communities:

I made a function schema working on OpenAI GPT, but it doesn't work on Google Gemini. Also, some of my function schemas work properly on Claude, but OpenAI throws an exception saying it's an invalid schema.

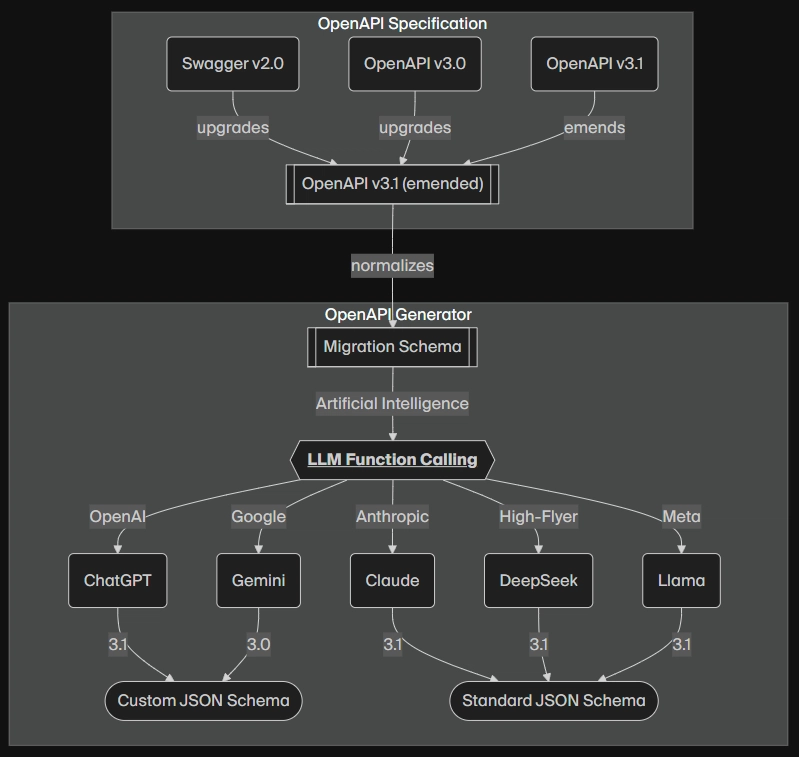

In reality, JSON schema specifications differ between AI vendors, and some don't fully support the standard JSON schema specification. For example, OpenAI doesn't support constraint keywords like minimum and format: uuid, while Gemini doesn't even support keywords like $ref and anyOf.

Specifically, OpenAI and Gemini have their own custom JSON schema specifications that differ from the standard, while Claude fully supports JSON Schema 2020-12 draft. Other models like DeepSeek and Llama don't restrict JSON schema specs, allowing the use of standard JSON schema specifications.
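A library that targets several vendors has to check every schema against what each vendor accepts. The sketch below walks a schema and reports the keywords a vendor rejects. Its rule table is an assumption that only encodes the examples given in this section; vendor support changes over time, so a real table must come from each vendor's documentation.

```typescript
type Vendor = "chatgpt" | "gemini" | "claude";

// Illustrative rule table built from this section's examples only.
// It is an assumption, not an authoritative list.
const UNSUPPORTED: Record<Vendor, ReadonlySet<string>> = {
  chatgpt: new Set(["minimum", "exclusiveMinimum", "exclusiveMaximum", "format"]),
  gemini: new Set(["$ref", "anyOf", "exclusiveMinimum", "exclusiveMaximum"]),
  claude: new Set<string>(),
};

// Walk a JSON schema and report every keyword the vendor rejects,
// as "path: keyword" strings.
function findUnsupported(schema: unknown, vendor: Vendor, path = "$"): string[] {
  const issues: string[] = [];
  if (Array.isArray(schema)) {
    schema.forEach((item, i) => issues.push(...findUnsupported(item, vendor, `${path}[${i}]`)));
    return issues;
  }
  if (schema === null || typeof schema !== "object") return issues;
  for (const [key, value] of Object.entries(schema)) {
    if (key === "properties" && value !== null && typeof value === "object") {
      // Property names are user data, not keywords: only check their schemas.
      for (const [name, sub] of Object.entries(value)) {
        issues.push(...findUnsupported(sub, vendor, `${path}.properties.${name}`));
      }
      continue;
    }
    if (UNSUPPORTED[vendor].has(key)) issues.push(`${path}: ${key}`);
    issues.push(...findUnsupported(value, vendor, `${path}.${key}`));
  }
  return issues;
}

// The "divide" parameters: y must be a non-zero number.
const divideParameters = {
  type: "object",
  properties: {
    x: { type: "number" },
    y: {
      anyOf: [
        { type: "number", exclusiveMaximum: 0 },
        { type: "number", exclusiveMinimum: 0 },
      ],
    },
  },
  required: ["x", "y"],
};

console.log(findUnsupported(divideParameters, "gemini"));
```

Under these assumed rules the same schema produces three issues for Gemini, two for OpenAI, and none for Claude, which is exactly the kind of divergence a library has to absorb on the user's behalf.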

Understanding the exact JSON schema specifications is the first step toward advancing from Function Calling to Agentic AI. Here's a list of JSON schema specifications for various AI vendors:

- @samchon/openapi
  - IChatGptSchema.ts: OpenAI ChatGPT
  - IClaudeSchema.ts: Anthropic Claude
  - IDeepSeekSchema.ts: High-Flyer DeepSeek
  - IGeminiSchema.ts: Google Gemini
  - ILlamaSchema.ts: Meta Llama

3.2. OpenAPI and MCP

```typescript
import { HttpLlm, IHttpLlmApplication } from "@samchon/openapi";

const app: IHttpLlmApplication<"chatgpt"> = HttpLlm.application({
  model: "chatgpt",
  document: await fetch(
    "https://shopping-be.wrtn.ai/editor/swagger.json",
  ).then((r) => r.json()),
});
console.log(app);
```

Converting OpenAPI document to AI function calling schemas

| Type | OpenAPI | MCP |
|---|---|---|
| Age | 15 years | 6 months |
| Ecosystem | Huge | Tiny |
| Participants | Every backend developer | AI early adopters |

OpenAPI documentation provides a well-structured specification for API functions and has been standardized and stable since 2010. However, it hasn't gained widespread adoption in the AI function calling ecosystem. Meanwhile, MCP (Model Context Protocol), despite being a newer protocol, has become widely adopted.

In terms of ecosystem, OpenAPI has a much larger number of documents than MCP, and its documentation quality is superior due to its long-standing acceptance as a standard among backend developers. So why hasn't OpenAPI been widely embraced in the AI ecosystem like MCP? We think the reason is the same as discussed in the previous chapter: the exact schema problem.

As mentioned, JSON schema specifications differ between AI vendors. Additionally, each OpenAPI version has its own distinct JSON schema specification. This, in our view, is why OpenAPI hasn't been widely accepted in the function calling ecosystem. People barely understand the JSON schema specification of each AI vendor, so how could they be expected to convert version-specific OpenAPI documents into JSON schemas for different AI vendors? In contrast, MCP, introduced and promoted by Anthropic (a major force in the AI ecosystem), has concise specifications that don't require a separate conversion process. That is the fundamental reason.
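One concrete example of version-specific schemas: OpenAPI 3.0 marks a nullable value with `nullable: true`, while OpenAPI 3.1 follows JSON Schema and lists "null" as one of the allowed types. The sketch below is a hypothetical helper, not the @samchon/openapi implementation, and it upgrades only the simple case; a full converter also has to handle Swagger 2.0, `$ref`, `enum`, and similar constructs.

```typescript
// A loose schema node; only the fields this sketch touches are typed.
interface SchemaNode {
  type?: string | string[];
  nullable?: boolean;
  properties?: Record<string, SchemaNode>;
  items?: SchemaNode;
  [keyword: string]: unknown;
}

// Rewrite OpenAPI 3.0 `nullable: true` into the OpenAPI 3.1 (JSON Schema)
// form, where "null" is simply another member of the `type` array.
function upgradeNullable(node: SchemaNode): SchemaNode {
  const out: SchemaNode = { ...node };
  if (out.nullable === true && typeof out.type === "string") {
    out.type = [out.type, "null"];
    delete out.nullable;
  } else if (out.nullable === false) {
    delete out.nullable; // the default; it has no 3.1 counterpart
  }
  // Other nullable cases (e.g. next to $ref) are left untouched in this sketch.
  if (node.properties !== undefined) {
    const properties: Record<string, SchemaNode> = {};
    for (const [name, child] of Object.entries(node.properties)) {
      properties[name] = upgradeNullable(child);
    }
    out.properties = properties;
  }
  if (node.items !== undefined) {
    out.items = upgradeNullable(node.items);
  }
  return out;
}

// The "extension" property of a file attachment, written in OpenAPI 3.0 style.
const v30: SchemaNode = {
  type: "object",
  properties: { extension: { type: "string", nullable: true, maxLength: 8 } },
};
console.log(JSON.stringify(upgradeNullable(v30)));
```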

We think that MCP is a well-structured protocol, but we also think there's no need to migrate from OpenAPI to MCP for function calling. OpenAPI can accomplish the same goals as MCP, so we recommend leveraging the existing OpenAPI ecosystem.

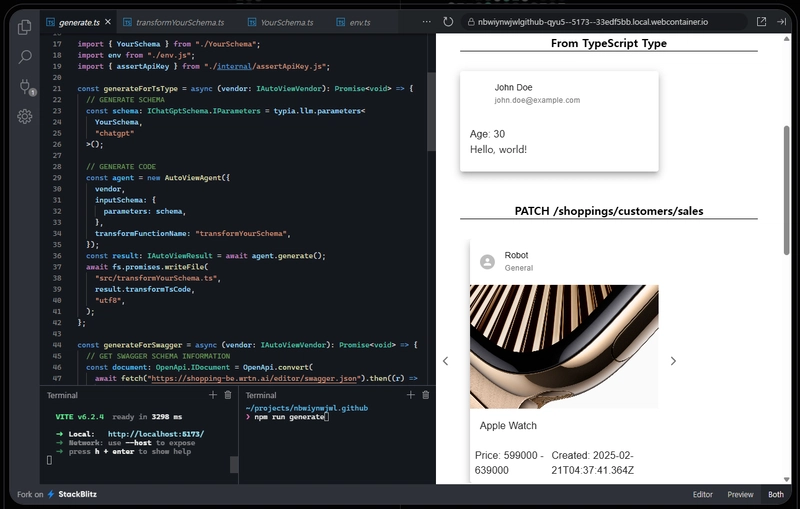

To support MCP-like function calling from OpenAPI documents, we're converting Swagger/OpenAPI documents to an emended OpenAPI v3.1 specification, removing ambiguous and duplicated expressions. We then transform this to a specific AI vendor's function schema by using a migration schema that aligns with functional structure.
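The conversion step can be sketched as follows. This is a deliberately simplified, hypothetical converter, not the @samchon/openapi implementation: it turns each path and HTTP method into one function, names it from the `operationId` (or from the method and path), and uses the JSON request body schema as the parameters. The real pipeline also merges path and query parameters and rewrites every schema for the target vendor.

```typescript
type HttpMethod = "get" | "post" | "put" | "patch" | "delete";
const METHODS: readonly HttpMethod[] = ["get", "post", "put", "patch", "delete"];

// A tiny subset of an OpenAPI 3.1 document: only the fields read here.
interface Operation {
  operationId?: string;
  summary?: string;
  description?: string;
  requestBody?: { content: Record<string, { schema?: unknown }> };
}

interface OpenApiDocument {
  paths: Record<string, Partial<Record<HttpMethod, Operation>>>;
}

// A vendor-neutral function schema handed to the model.
interface FunctionSchema {
  name: string;
  description: string;
  parameters: unknown;
}

// Hypothetical converter: one function per (path, method) pair.
function toFunctions(doc: OpenApiDocument): FunctionSchema[] {
  const functions: FunctionSchema[] = [];
  for (const [path, item] of Object.entries(doc.paths)) {
    for (const method of METHODS) {
      const operation = item[method];
      if (operation === undefined) continue;
      // Fallback name derived from method and path, e.g. "post_boards".
      const slug = path.replace(/[^A-Za-z0-9]+/g, "_").replace(/^_+|_+$/g, "");
      functions.push({
        name: operation.operationId ?? `${method}_${slug}`,
        description: operation.description ?? operation.summary ?? "",
        parameters: operation.requestBody?.content["application/json"]?.schema ?? {
          type: "object",
          properties: {},
        },
      });
    }
  }
  return functions;
}

const sampleDocument: OpenApiDocument = {
  paths: {
    "/boards": {
      post: {
        summary: "Create a new article",
        requestBody: {
          content: {
            "application/json": {
              schema: {
                type: "object",
                properties: { title: { type: "string" }, content: { type: "string" } },
                required: ["title", "content"],
              },
            },
          },
        },
      },
    },
  },
};

console.log(toFunctions(sampleDocument));
```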

Below is demonstration code that converts a shopping mall's OpenAPI document to a function schema, plus a video showing it running as an actual AI agent. Simply by importing the swagger.json file from an existing shopping mall server, you can search for products through chat, purchase them, manage delivery, apply discount coupons, or process refunds.

Understanding, supporting, and utilizing JSON schema is what function calling is all about.

```typescript
import { Agentica, assertHttpLlmApplication } from "@agentica/core";
import OpenAI from "openai";

const agent = new Agentica({
  model: "chatgpt",
  vendor: {
    model: "gpt-4o-mini",
    api: new OpenAI({ apiKey: "********" }),
  },
  controllers: [
    {
      protocol: "http",
      name: "shopping",
      // Convert the shopping mall's Swagger document into function schemas
      application: assertHttpLlmApplication({
        model: "chatgpt",
        document: await fetch(
          "https://shopping-be.wrtn.ai/editor/swagger.json",
        ).then((res) => res.json()),
      }),
      // Where (and with which credentials) the selected API functions are called
      connection: {
        host: "https://shopping-be.wrtn.ai",
        headers: {
          Authorization: "Bearer ********",
        },
      },
    },
  ],
});

await agent.conversate("I wanna buy a Macbook.");
```

3.3. Compiler Driven Development

```typescript
import { ILlmApplication } from "@samchon/openapi";
import typia, { tags } from "typia";

// The compiler (typia) builds the function schema from the TypeScript type.
const app: ILlmApplication<"chatgpt"> =
  typia.llm.application<BbsArticleService, "chatgpt">();
console.log(app);

interface BbsArticleService {
  /**
   * Update an article.
   */
  update(props: {
    /**
     * Target article's {@link IBbsArticle.id}.
     */
    id: string & tags.Format<"uuid">;

    /**
     * New content to update.
     */
    input: IBbsArticle.IUpdate;
  }): void;
}

interface IBbsArticle { ... }
```

Understanding the exact JSON schema specifications and their conversion logic for various AI vendors is primarily a requirement for library or framework developers. A good library is one where users can invoke AI functions simply by providing a function or class, without needing to know the complex details of JSON schema specifications.

To make AI function calling convenient for users and to realize the potential of Agentic AI, function schemas must be built by compilers. If AI function schemas cannot be generated programmatically, function calling will inevitably be overshadowed by workflow agent approaches.

| Type | Value |
|---|---|
| Number of Functions | 289 |
| LOC of source code | 37,752 |
| LOC of function schema | 212,069 |
| Compiler Success Rate | 100.00000 % |
| Human Success Rate | 0.00002 % |

For example, in the shopping mall backend demonstration from the previous section, the AI function schema is 5.62 times larger than the source code in lines of code (LOC). If users had to write AI function schemas by hand, they would need to invest about 5.62 times more effort than writing the original source code.
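The 5.62 figure is the ratio of the two LOC rows in the table above:

$$
\frac{\text{LOC of function schema}}{\text{LOC of source code}} = \frac{212{,}069}{37{,}752} \approx 5.62
$$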

Source code development is aided by compilers and IDEs, but JSON schema development lacks similar support, making errors much more likely. While a human frontend developer can intuitively work around documentation errors made by backend developers, AI is unforgiving. Even minor mistakes in AI function schemas can break entire AI applications.

AI never forgives schema mistakes

```php
<?php
class BbsArticleController {
  /**
   * @OA\Post(
   *   path="/boards",
   *   tags={"BBS"},
   *   summary="Create a new article",
   *   description="Create a new article with its first snapshot",
   *   @OA\RequestBody(
   *     description="Article information to create",
   *     required=true,
   *     @OA\MediaType(
   *       mediaType="application/json",
   *       @OA\Schema(
   *         @OA\Property(
   *           property="title",
   *           type="string",
   *           description="Title of article",
   *         ),
   *         @OA\Property(
   *           property="content",
   *           type="string",
   *           description="Content body of article"
   *         ),
   *         @QA\Property(
   *           property="files",
   *           type="array",
   *           @QA\Items(
   *             @QA\Schema(
   *               @QA\Property(
   *                 property="name",
   *                 type="string",
   *                 maxLength=255,
   *                 description="File name, except the extension"
   *               ),
   *               @QA\Property(
   *                 property="extension",
   *                 type="string",
   *                 nullable=true,
   *                 maxLength=8,
   *                 description="File extension. If no extension, then set null"
   *               ),
   *               @QA\Property(
   *                 property="url",
   *                 type="string",
   *                 format="url",
   *                 description="URL address that the file is located in"
   *               )
   *             )
   *           )
   *         )
   *       )
   *     )
   *   ),
   *   @OA\Response(response="200", description="Success"),
   *   @OA\Response(response="400", description="Fail")
   * )
   */
  public function create(Request $request);
}
?>
```

Therefore, AI function schemas must be constructed by compilers.

If you're planning to convert a backend server to an AI agent using function calling, avoid languages and frameworks that require developers to manually write JSON schemas, such as PHP Laravel and Java Spring RestDocs.

Instead, use compiler-driven (or reflection-based) OpenAPI document generators like Nestia or FastAPI. Then convert the compiler-generated OpenAPI to a specific AI function schema using the @samchon/openapi library, as shown below.

Never write AI function schemas by hand.

```typescript
import { HttpLlm, IHttpLlmApplication } from "@samchon/openapi";

const app: IHttpLlmApplication<"claude"> = HttpLlm.application({
  model: "claude",
  document: await fetch(
    "https://shopping-be.wrtn.ai/editor/swagger.json",
  ).then((r) => r.json()),
});
```

3.4. Document Driven Development

Function calling driven AI agent development can help developers escape from the difficulty and inflexibility of Agent workflow development. Instead, developers must concentrate on providing thorough documentation for each function.

We have observed that many AI developers who claim Function Calling and MCP don't work as expected haven't put sufficient effort into documentation, which leads to function calling failures even with just a few functions.

In contrast, our shopping mall agent demonstration includes 289 API functions and works properly without any significant problems. The difference stems from the level of effort put into documentation.

Here is an example of the documentation in the shopping mall project:

```typescript
export function ShoppingSaleController<Actor extends IShoppingActorEntity>(
  props: IShoppingControllerProps,
) {
  @Controller(`shoppings/${props.path}/sales`)
  abstract class ShoppingSaleController {
    // Method bodies are omitted; only signatures and documentation are shown.

    /**
     * List up every summarized sales.
     *
     * List up every {@link IShoppingSale.ISummary summarized sales}.
     *
     * As you can see, returned sales are summarized, not detailed. It does not
     * contain the SKU (Stock Keeping Unit) information represented by the
     * {@link IShoppingSaleUnitOption} and {@link IShoppingSaleUnitStock} types.
     * If you want to get such detailed information of a sale, use
     * `GET /shoppings/customers/sales/{id}` operation for each sale.
     *
     * > If you're an A.I. chatbot, and the user wants to buy or compose a
     * > {@link IShoppingCartCommodity shopping cart} from a sale, please
     * > call the `GET /shoppings/customers/sales/{id}` operation at least once
     * > to the target sale to get detailed SKU information about the sale.
     * > It needs to be run at least once for the next steps.
     *
     * @param input Request info of pagination, searching and sorting
     * @returns Paginated sales with summarized information
     * @tag Sale
     *
     * @author Samchon
     */
    @TypedRoute.Patch()
    public index(
      @props.AuthGuard() actor: Actor,
      @TypedBody() input: IShoppingSale.IRequest,
    ): Promise<IPage<IShoppingSale.ISummary>>;

    /**
     * Get a sale with detailed information.
     *
     * Get a {@link IShoppingSale sale} with detailed information including
     * the SKU (Stock Keeping Unit) information represented by the
     * {@link IShoppingSaleUnitOption} and {@link IShoppingSaleUnitStock} types.
     *
     * > If you're an A.I. chatbot, and the user wants to buy or compose a
     * > {@link IShoppingCartCommodity shopping cart} from a sale, please call
     * > this operation at least once to the target sale to get detailed SKU
     * > information about the sale.
     * >
     * > It needs to be run at least once for the next steps. In other words,
     * > if your A.I. agent has called this operation to a specific sale, you
     * > don't need to call this operation again for the same sale.
     * >
     * > Additionally, please do not summarize the SKU information. Just show
     * > every option and stock in the sale with detailed information.
     *
     * @param id Target sale's {@link IShoppingSale.id}
     * @returns Detailed sale information
     * @tag Sale
     *
     * @author Samchon
     */
    @TypedRoute.Get(":id")
    public at(
      @props.AuthGuard() actor: Actor,
      @TypedParam("id") id: string & tags.Format<"uuid">,
    ): Promise<IShoppingSale>;
  }
  return ShoppingSaleController;
}
```

When writing descriptions for functions, you need to detail each function's purpose clearly and thoroughly. If there are relationships between functions, such as prerequisites, you must describe these as well.

```typescript
/**
 * Final component information on units for sale.
 *
 * `IShoppingSaleUnitStock` is a subsidiary entity of {@link IShoppingSaleUnit}
 * that represents a product catalog for sale, and is a kind of final stock that is
 * constructed by selecting all {@link IShoppingSaleUnitSelectableOption options}
 * (variable "select" type) and their
 * {@link IShoppingSaleUnitOptionCandidate candidate} values in the belonging unit.
 * It is the "good" itself that customers actually purchase.
 *
 * - Product Name: MacBook
 * - Options
 *   - CPU: { i3, i5, i7, i9 }
 *   - RAM: { 8GB, 16GB, 32GB, 64GB, 96GB }
 *   - SSD: { 256GB, 512GB, 1TB }
 * - Number of final stocks: 4 * 5 * 3 = 60
 *
 * For reference, the total number of `IShoppingSaleUnitStock` records in an
 * attribution unit can be obtained using the Cartesian product. In other words,
 * multiplying together the numbers of candidate values of every (variable
 * "select" type) option gives the total number of final stocks in the unit.
 *
 * Of course, without a single variable "select" type option, the final stocks
 * count in the unit is only 1.
 *
 * @author Samchon
 */
export interface IShoppingSaleUnitStock { ... }
```

When describing a type, write it as an ERD (Entity Relationship Diagram) description.
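The Cartesian-product rule in the `IShoppingSaleUnitStock` description can be checked with a short sketch. `enumerateStocks` is a hypothetical helper, not part of the shopping mall project; it reproduces the MacBook example's 4 * 5 * 3 = 60 final stocks and the single-stock case for a unit without options.

```typescript
// Candidate values of each variable "select" option (the MacBook example).
const macbookOptions: Record<string, string[]> = {
  CPU: ["i3", "i5", "i7", "i9"],
  RAM: ["8GB", "16GB", "32GB", "64GB", "96GB"],
  SSD: ["256GB", "512GB", "1TB"],
};

// Enumerate every final stock as the Cartesian product of the candidates.
// Starting from one empty combination means a unit with no "select" option
// yields exactly one final stock.
function enumerateStocks(options: Record<string, string[]>): Record<string, string>[] {
  let stocks: Record<string, string>[] = [{}];
  for (const [option, candidates] of Object.entries(options)) {
    const next: Record<string, string>[] = [];
    for (const stock of stocks) {
      for (const value of candidates) {
        next.push({ ...stock, [option]: value });
      }
    }
    stocks = next;
  }
  return stocks;
}

console.log(enumerateStocks(macbookOptions).length); // 60 = 4 * 5 * 3
console.log(enumerateStocks({}).length); // 1
```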

```typescript
import { tags } from "typia";

/**
 * Restriction information of the coupon.
 *
 * @author Samchon
 */
export interface IShoppingCouponRestriction {
  /**
   * Access level of coupon.
   *
   * - public: possible to find from public API
   * - private: unable to find from public API
   *   - arbitrarily assigned by the seller or administrator
   *   - issued from one-time link
   */
  access: "public" | "private";

  /**
   * Exclusivity or not.
   *
   * An exclusive discount coupon refers to a discount coupon that has an
   * exclusive relationship with other discount coupons and can only be
   * used alone. That is, when an exclusive discount coupon is used, no
   * other discount coupon can be used for the same
   * {@link IShoppingOrder order} or {@link IShoppingOrderGood good}.
   *
   * Please note that this exclusive attribute is a very different concept
   * from multiplicative, which means whether the same coupon can be
   * multiplied and applied to multiple coupons of the same order, so
   * please do not confuse them.
   */
  exclusive: boolean;

  /**
   * Limited quantity issued.
   *
   * If there is a limit to the quantity issued, it becomes impossible
   * to issue tickets exceeding this value.
   *
   * In other words, the concept of N coupons being issued on
   * a first-come, first-served basis is created.
   */
  volume: null | (number & tags.Type<"uint32">);

  /**
   * Limited quantity issued per person.
   *
   * As a limit to the total amount of issuance per person, it is
   * common to assign 1 to limit duplicate issuance to the same citizen,
   * or to use the NULL value to set no limit.
   *
   * Of course, by assigning a value of N, the total amount issued
   * to the same citizen can be limited.
   */
  volume_per_citizen: null | (number & tags.Type<"uint32">);

  /**
   * Expiration day(s) value.
   *
   * The concept of expiring N days after a discount coupon ticket is issued.
   *
   * Therefore, customers must use the ticket within N days, if possible,
   * from the time it is issued.
   */
  expired_in: null | (number & tags.Type<"uint32">);

  /**
   * Expiration date.
   *
   * A concept that expires after YYYY-MM-DD after a discount coupon ticket
   * is issued.
   *
   * Double restrictions are possible with expired_in, of which the one
   * with the shorter expiration date is used.
   */
  expired_at: null | (string & tags.Format<"date-time">);
}
```

Don't forget to describe properties.

4. Orchestration Strategy

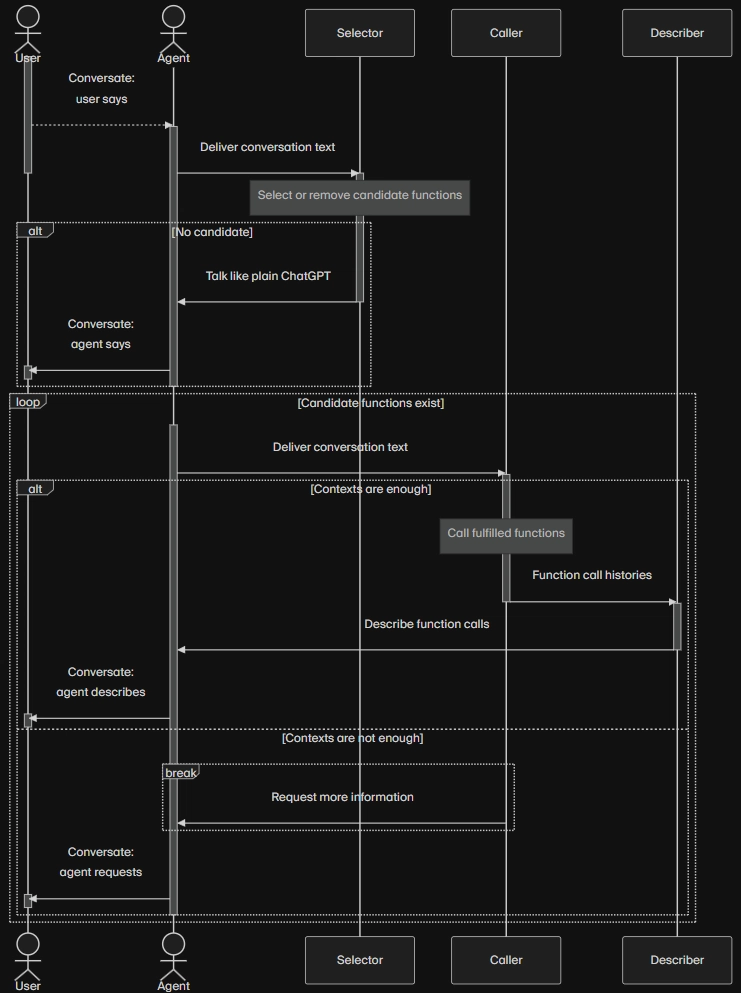

4.1. Selector, determine candidate functions to call

Filter candidate functions to reduce context.

If you connect the GitHub MCP server to Claude Desktop, it is likely to break down in many cases. This is because the GitHub MCP server exposes 30 functions, and Claude Desktop lists all of them to the agent at once. Too many functions create too much context, leading to hallucinations.

To prevent such hallucinations, we've created a selector agent that selects candidate functions to call based on each function's name and description. Only the candidate functions selected by the selector agent are presented to the main agent, ensuring that the function context doesn't become overwhelming as in the Claude Desktop case.

When selecting candidate functions, the selector agent also provides reasons for its selections. Additionally, since the selector agent can remove candidate functions that are no longer needed, the list of candidate functions typically doesn't exceed four.

GitHub MCP: too many functions, and it breaks down

Agentica with enhanced function calling works properly
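The bookkeeping around the selector can be sketched as follows. In Agentica the selection itself is made by an LLM; here its decisions are represented by a hypothetical `Selection` shape, and `CandidatePool` and the example function names are illustrative assumptions. The selector reads only names and descriptions, the pool keeps selected functions with their reasons, drops cancelled ones, and ignores names that don't exist, so the caller agent only ever sees a short list.

```typescript
interface FunctionInfo {
  name: string;
  description: string;
}

// Hypothetical shape of one decision made by the selector agent.
interface Selection {
  action: "select" | "cancel";
  name: string;
  reason: string;
}

// What the selector reads: names and descriptions only, not full schemas.
function selectorListing(functions: FunctionInfo[]): string {
  return functions.map((f) => `- ${f.name}: ${f.description}`).join("\n");
}

// Candidate pool kept across turns; only these functions' full schemas are
// passed on to the caller agent.
class CandidatePool {
  private readonly all: FunctionInfo[];
  // Selected function name -> the selector's reason for choosing it.
  private readonly candidates = new Map<string, string>();

  constructor(all: FunctionInfo[]) {
    this.all = all;
  }

  apply(selections: Selection[]): void {
    for (const s of selections) {
      // Ignore names that do not exist: the selector may hallucinate them.
      if (!this.all.some((f) => f.name === s.name)) continue;
      if (s.action === "select") this.candidates.set(s.name, s.reason);
      else this.candidates.delete(s.name);
    }
  }

  current(): FunctionInfo[] {
    return this.all.filter((f) => this.candidates.has(f.name));
  }
}

// Example with hypothetical function names from a shopping scenario.
const catalog: FunctionInfo[] = [
  { name: "getSale", description: "Get a sale with detailed information." },
  { name: "createCartCommodity", description: "Put a sale's stock into the shopping cart." },
  { name: "refundOrder", description: "Refund an order." },
];
console.log(selectorListing(catalog));

const pool = new CandidatePool(catalog);
pool.apply([
  { action: "select", name: "getSale", reason: "SKU details are needed before buying." },
  { action: "select", name: "createCartCommodity", reason: "The user wants to buy." },
]);
pool.apply([{ action: "cancel", name: "getSale", reason: "SKU details already fetched." }]);
console.log(pool.current().map((f) => f.name)); // only createCartCommodity remains
```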

4.2. Caller with Validation feedback

Function calling is not perfect, so validation feedback is essential.

After the selector agent has done its work, the caller agent presents the candidate functions to the main agent for actual function calling. However, the caller agent has an additional responsibility: validation feedback.

Validation feedback is a strategy for correcting incorrectly typed arguments. If the AI agent makes a mistake when composing arguments for a function call, the caller agent provides detailed type error information, allowing the agent to correct the mistake in subsequent attempts.

For example, in the shopping mall agent demonstration, when composing a shopping cart to purchase products (the ShoppingCartCommodity case), the AI agent has an error rate of approximately 60%: 6 of the 10 trials in the table below needed at least one round of validation feedback. Without validation feedback, function-calling-driven AI agents cannot operate reliably.

| Name | Status (10 trials) |
|---|---|
| ObjectConstraint | 1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣ |
| ObjectFunctionSchema | 2️⃣2️⃣4️⃣2️⃣2️⃣2️⃣2️⃣2️⃣5️⃣2️⃣ |
| ObjectHierarchical | 1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣2️⃣1️⃣1️⃣2️⃣ |
| ObjectJsonSchema | 1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣ |
| ObjectSimple | 1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣ |
| ObjectUnionExplicit | 1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣ |
| ObjectUnionImplicit | 1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣ |
| ShoppingCartCommodity | 1️⃣2️⃣2️⃣3️⃣1️⃣1️⃣4️⃣2️⃣1️⃣2️⃣ |
| ShoppingOrderCreate | 1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣ |
| ShoppingOrderPublish | 1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣❌1️⃣1️⃣1️⃣ |
| ShoppingSaleDetail | 1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣ |
| ShoppingSalePage | 1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣1️⃣ |

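Value 1 means that function calling succeeded without validation feedback.

Other values give the number of attempts that were needed, so a value of N means the call succeeded after N - 1 rounds of validation feedback. ❌ marks a trial that did not succeed.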
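The validation feedback loop can be sketched like this. The hand-written `validate` function, the argument shape (`sale_id`, `quantity`), and the stub model are assumptions for illustration; a compiler-driven framework would generate the validator from the parameter type instead. Each failed attempt produces detailed errors (path, expected type, actual value) that are fed into the next attempt.

```typescript
// One detailed type error reported back to the model.
interface ValidationError {
  path: string;
  expected: string;
  value: unknown;
}

type Args = Record<string, unknown>;

const UUID = /^[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}$/i;

// Hypothetical validator for { sale_id: uuid string; quantity: integer >= 1 }.
// A compiler-driven framework would generate this from the parameter type.
function validate(args: Args): ValidationError[] {
  const errors: ValidationError[] = [];
  const saleId = args["sale_id"];
  const quantity = args["quantity"];
  if (typeof saleId !== "string" || !UUID.test(saleId)) {
    errors.push({ path: "$input.sale_id", expected: "string (uuid)", value: saleId });
  }
  if (typeof quantity !== "number" || !Number.isInteger(quantity) || quantity < 1) {
    errors.push({ path: "$input.quantity", expected: "integer >= 1", value: quantity });
  }
  return errors;
}

// The model is abstracted as a function of the previous attempt's errors
// (an empty array on the first attempt) that returns new arguments.
type Model = (feedback: ValidationError[]) => Args;

// Call, validate, and on failure feed the detailed errors into the next try.
function callWithFeedback(model: Model, maxAttempts = 3): { args: Args; attempts: number } {
  let feedback: ValidationError[] = [];
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const args = model(feedback);
    feedback = validate(args);
    if (feedback.length === 0) return { args, attempts: attempt };
  }
  throw new Error(`Arguments still invalid after ${maxAttempts} attempts`);
}

// Stub model: first sends quantity as a string, then fixes it after feedback.
const stubModel: Model = (feedback) => ({
  sale_id: "1f0e5a3c-8d2b-4c7e-9a1b-2c3d4e5f6a7b",
  quantity: feedback.length === 0 ? "2" : 2,
});

const outcome = callWithFeedback(stubModel);
console.log(outcome.attempts); // 2
```

With the stub model the call succeeds on the second attempt, which corresponds to a 2️⃣ in the table above.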

4.3. Describer

The describer agent simply describes the return value of a function call as Markdown content.

If you want to enhance visualization, it is okay to change the return value of function calling to a specific UI component.
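In Agentica the describer is itself an LLM. When only a plain fallback is needed, a deterministic renderer can stand in for it; the sketch below is such a stand-in (a different technique from the LLM describer), with a hypothetical `toMarkdown` that turns a list of objects into a Markdown table and a single object into a bullet list.

```typescript
type Row = Record<string, unknown>;

function isRow(v: unknown): v is Row {
  return typeof v === "object" && v !== null && !Array.isArray(v);
}

// Deterministic stand-in for the describer: a list of objects becomes a
// Markdown table, a single object becomes a bullet list, and anything else
// is printed as JSON.
function toMarkdown(value: unknown): string {
  if (Array.isArray(value) && value.length > 0 && value.every(isRow)) {
    const rows: Row[] = value;
    const columns = Object.keys(rows[0] ?? {});
    const header = `| ${columns.join(" | ")} |`;
    const divider = `|${columns.map(() => "---").join("|")}|`;
    const body = rows.map((row) => `| ${columns.map((c) => String(row[c] ?? "")).join(" | ")} |`);
    return [header, divider, ...body].join("\n");
  }
  if (isRow(value)) {
    return Object.entries(value)
      .map(([key, v]) => `- **${key}**: ${JSON.stringify(v)}`)
      .join("\n");
  }
  return JSON.stringify(value);
}

console.log(toMarkdown([{ name: "MacBook", price: 1999 }]));
```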

Also, if you want to build a dedicated visual component for each function but don't have enough time, consider using our other framework, AutoView, which generates TypeScript frontend code from schema information.

If you have 100 TypeScript functions, you can generate the source code of 100 frontend components. Likewise, if you have 200 API functions, you can generate the source code of a UI component for each of the 200 API functions.

- Github Repository: https://github.com/wrtnlabs/autoview

- Playground Website: https://wrtnlabs.io/autoview

5. Agentica Framework

Agentica is an Agentic AI framework specialized in LLM function calling.

All the knowledge that we've researched since 2023 and shared in this article has been incorporated into the Agentica Framework.

Just list your functions, and you can build any agent you want. By managing only the list of functions, you can keep your agent flexible, scalable, and highly productive.

- Github Repository: https://github.com/wrtnlabs/agentica

- Homepage: https://wrtnlabs.io/agentica

- Supported Protocols
  - TypeScript Class
  - Swagger/OpenAPI Document
  - MCP

```typescript
import { Agentica, assertHttpLlmApplication } from "@agentica/core";
import OpenAI from "openai";
import typia from "typia";

// MobileCamera and MobileFileSystem stand for your own TypeScript classes (not shown).

const agent = new Agentica({
  model: "chatgpt",
  vendor: {
    model: "gpt-4o-mini",
    api: new OpenAI({ apiKey: "********" }),
  },
  controllers: [
    // Functions from OpenAPI Document
    {
      protocol: "http",
      name: "shopping",
      application: assertHttpLlmApplication({
        model: "chatgpt",
        document: await fetch(
          "https://shopping-be.wrtn.ai/editor/swagger.json",
        ).then((r) => r.json()),
      }),
      connection: {
        host: "https://shopping-be.wrtn.ai",
        headers: {
          Authorization: "Bearer ********",
        },
      },
    },
    // Functions from TypeScript Classes
    {
      protocol: "class",
      name: "camera",
      application: typia.llm.application<MobileCamera, "chatgpt">(),
      execute: new MobileCamera(),
    },
    {
      protocol: "class",
      name: "filesystem",
      application: typia.llm.application<MobileFileSystem, "chatgpt">(),
      execute: new MobileFileSystem(),
    },
  ],
});

await agent.conversate("I want to write an article.");
```